How to Earn LLM Citations to Build Traffic & Authority


Article Performance

Data from Ahrefs

The number of websites linking to this post.

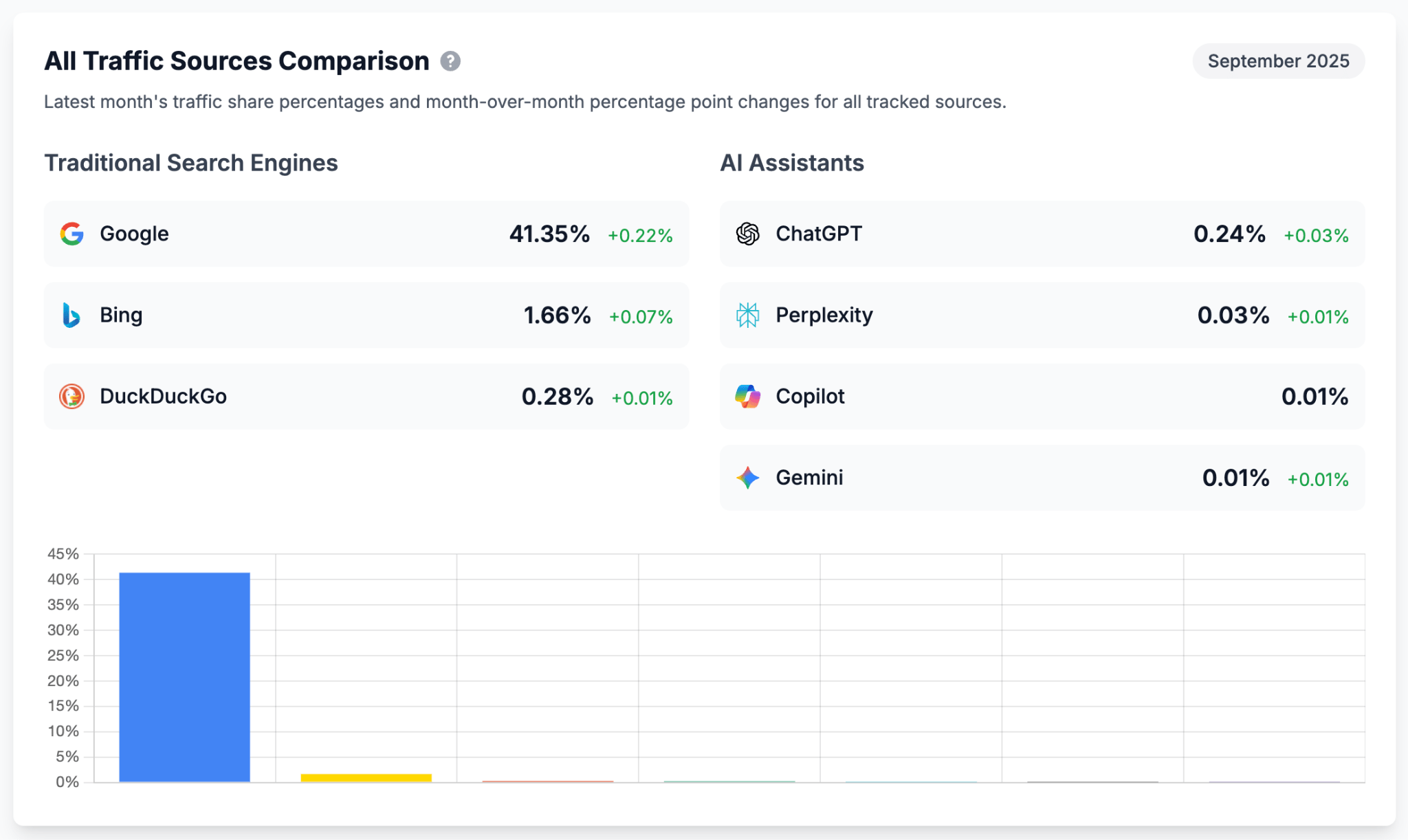

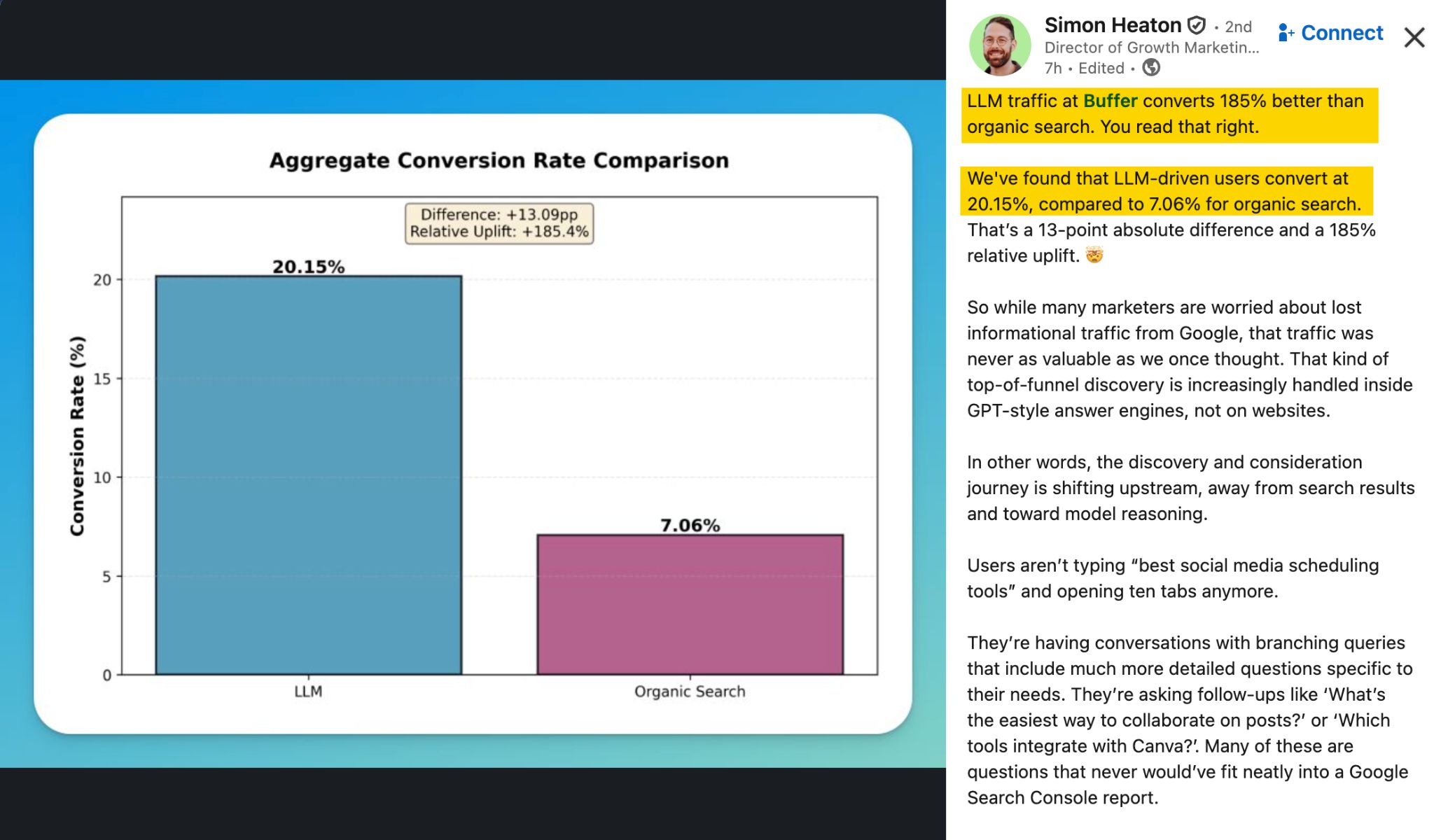

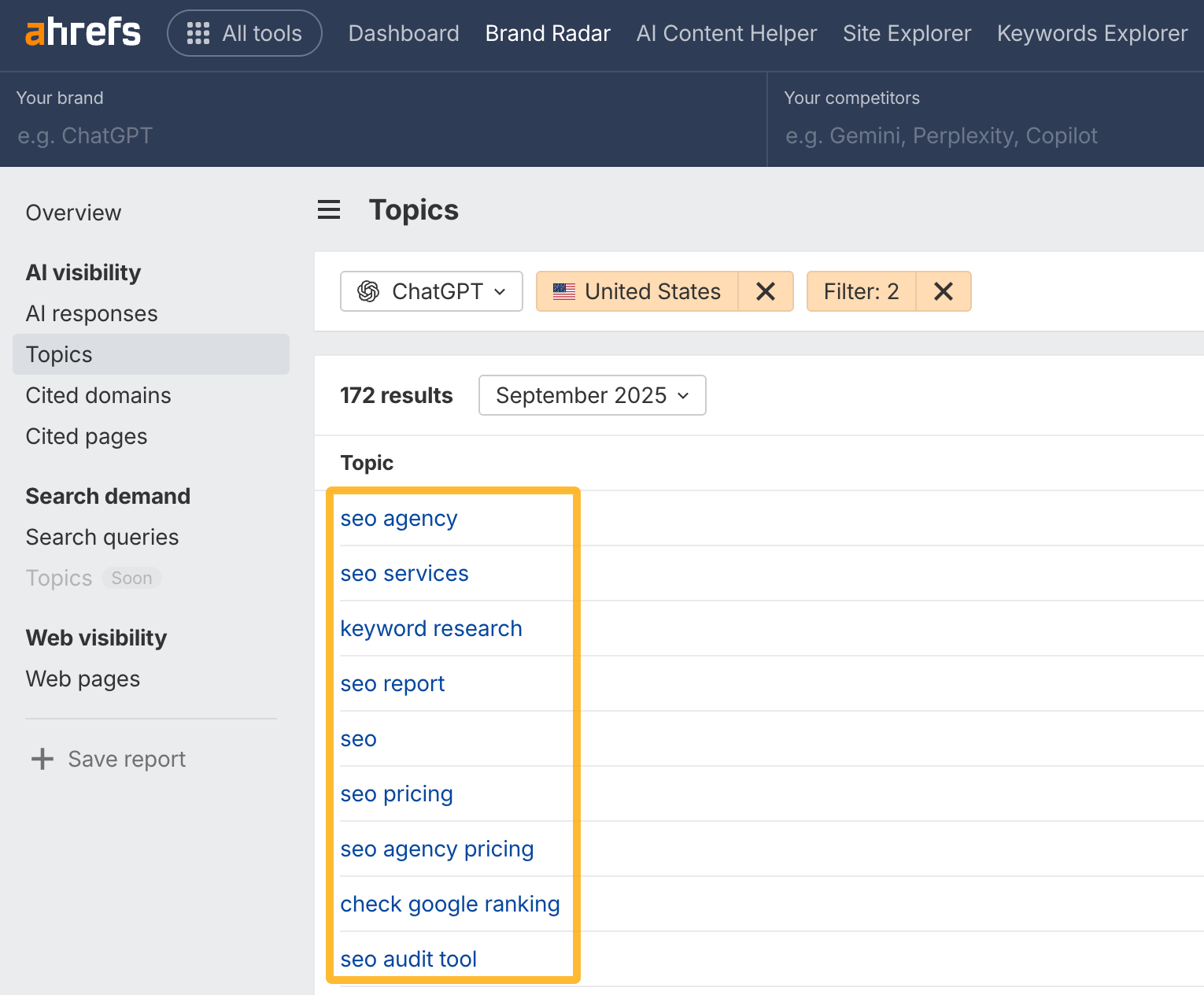

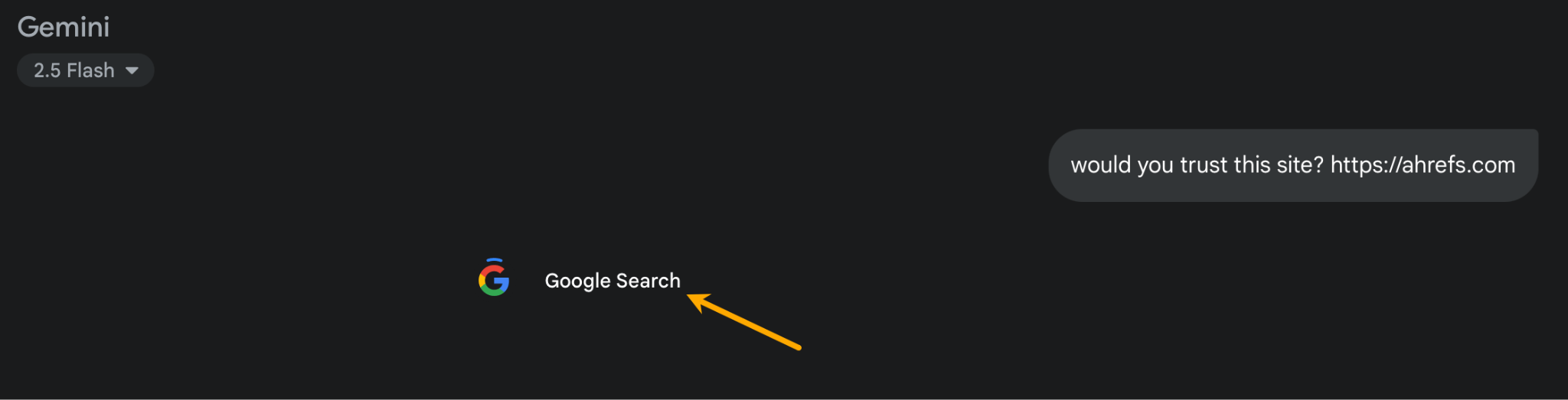

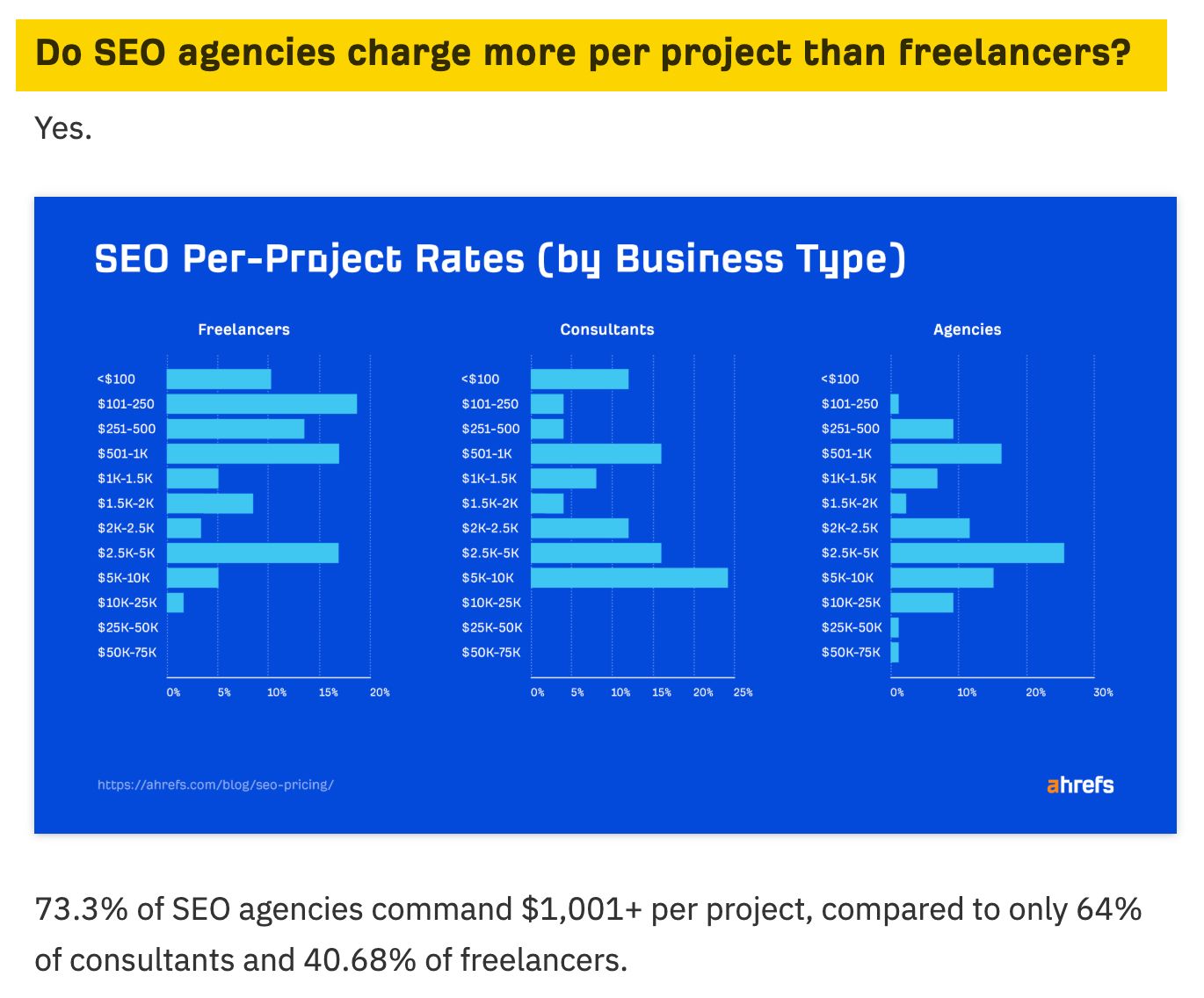

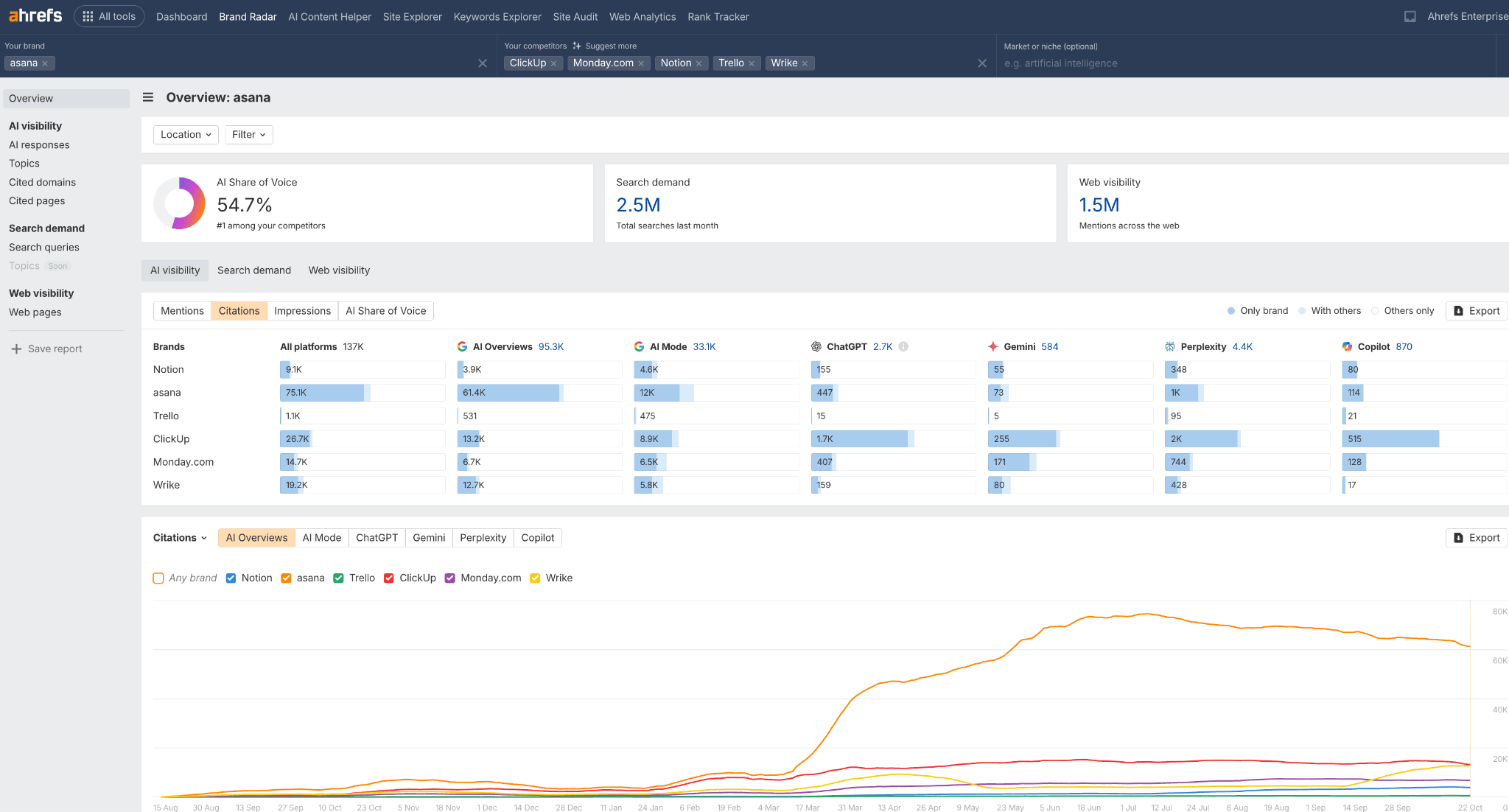

This post's estimated monthly organic search traffic.

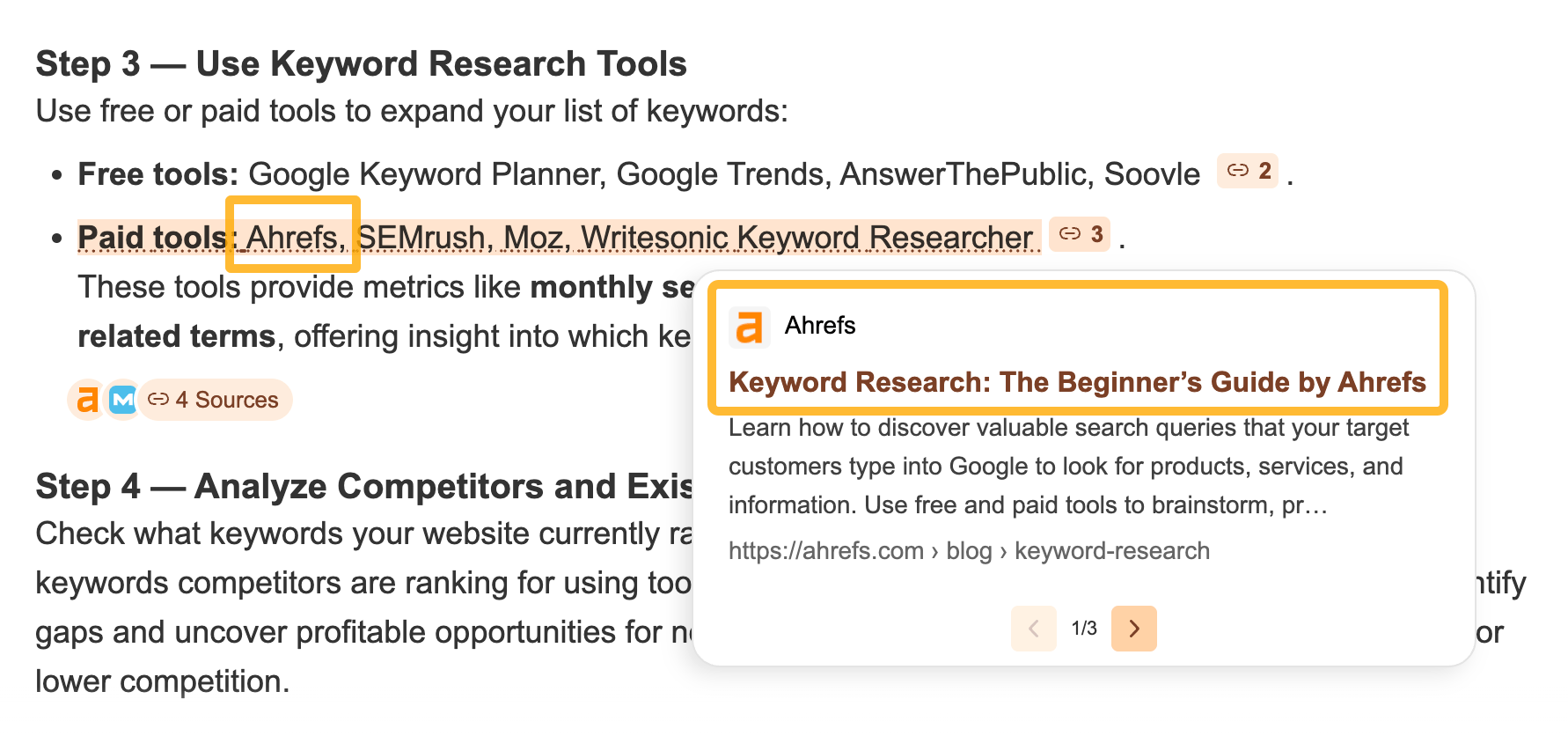

Ask ChatGPT, “How much does SEO cost?” and you’ll likely see Ahrefs cited as a source. Ask Claude about email marketing benchmarks, and Mailchimp’s data appears. Search Perplexity for project management tips, and Asana’s guides get referenced.

At first glance, it looks like only big brands get cited in AI answers. In the top 10 cited domains across all major LLMs, you’ll find sites like Wikipedia, Reddit, Mayo Clinic, Quora, Healthline, and Amazon. But these brands aren’t cited just because they’re famous. They’re cited because their content hits specific marks, which I’ll explain in this article.

The real question isn’t whether you can outdo Wikipedia on broad topics. It’s whether you can become the trusted expert in your specific niche: the source that language models rely on when people ask about your field.

LLM stands for “Large Language Model”, the technology behind AI chatbots like ChatGPT, Claude, Gemini, and Perplexity. When we talk about “LLM citations,” we mean when these AI tools reference your website as a source in their answers. But not all references are created equal. There are two main ways your brand can appear in AI answers.

Citations

A citation is when the AI attributes information to your content, including a link to your site. These usually appear when someone asks for data, statistics, how-to guides, or information about recent events. The AI pulls facts from your content and credits you as the source (not necessarily mentioning your brand in the main body of the answer). In most AI interfaces, citations appear “in the background”; you’ll find them in a “sources” section, at the bottom of the response, or behind small numbered references you can click.

Mentions

A mention is when your brand or product name shows up in an AI-generated answer. This typically happens when the user asks for product recommendations.
For example, if someone asks “What’s the best project management software?”, the AI might list your product in its main response, but without linking to you. Mentions are valuable because they build brand awareness. People see your name, remember it, and might search for you later.

The best of both worlds

Sometimes you get lucky and receive both: your brand is mentioned in the main text and linked in the citations list. These combined appearances are the most valuable because they give you visibility (the mention) plus authority and potential traffic (the citation).

While AI citations boost credibility and visibility, they work differently from traditional search. The real value is in immediate traffic and the long-term brand equity you’re building.

They provide measurable, attributable traffic

Let’s be clear: traffic from AI citations is modest. Our data from roughly 60,000 websites shows that all large language models combined account for less than 1% of total traffic, compared to Google Search’s 41.35%.

And there’s another wrinkle: citations don’t automatically equal clicks. I analyzed 1,000 of the most-cited pages from ahrefs.com, and only about 10% also ranked among the top pages getting traffic from ChatGPT. Most traffic went to practical resources: homepages, product pages, and free tools.

But here’s why this traffic still matters: quality over quantity. The visitors who do click through typically have strong intent. They’ve already seen a summary but want more depth, which suggests genuine interest in your content.

When those visitors convert, the results can be exceptional. Buffer reported conversion rates 185% higher than organic search, while we’ve seen rates up to 23 times higher.

Also, this traffic serves as a crucial benchmark. It allows you to:

Citations don’t always equal traffic

When I looked at 1,000 of the most-cited pages from ahrefs.com, only about 10% also ranked among the top pages getting traffic from ChatGPT. Most of that traffic went to the homepage, product pages, and free tools.
In other words, being mentioned a lot doesn’t necessarily mean people will click through. And that’s fine. Citations help build authority and brand recognition, even if they don’t drive direct visits. Meanwhile, the pages that do attract the most traffic are usually practical tools or resources that meet users’ immediate needs.

They signal trust and authority

When an AI cites your content, it’s essentially telling users: “This source is reliable enough to stake my answer on.” That endorsement builds credibility, even if people never click through to your site.

Think about it: if someone asks ChatGPT about SEO pricing and sees your brand cited, you’ve just been positioned as an expert in their mind. That perception matters.

For example, here are some of the topics where Ahrefs is cited. The pattern is quite clear: Ahrefs is an authority in SEO.

They build brand recognition in a new marketing channel

AI tools are expanding quickly. ChatGPT reached 100 million users faster than any other app ever, and now has around 800 million people using it every week.

As search behavior shifts toward AI platforms, this is fundamentally about reputation building in a new channel. The brands that appear consistently in AI citations will build the same type of authority that early SEO leaders established in traditional search: they become the trusted names audiences encounter repeatedly when seeking answers.

To understand how to get cited, you first need to understand how AI tools actually find and reference content.

Two sources of information

AI chatbots pull from two different knowledge bases: their training data, and content they retrieve from the web through retrieval-augmented generation (RAG).

Here’s the key point: typically, it’s RAG that produces citations. When an AI uses its training data, it doesn’t link to sources right away. But when it searches the web through RAG, it cites the pages it found.

So, if you want citations, you need to optimize for AI platforms that actively search the web. Fortunately, most major LLMs now use RAG.
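To make the difference between the two paths concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how any real assistant is implemented: `WEB_PAGES`, `search_web`, and `answer` are hypothetical names, the page text is placeholder content, and the "retrieval" is plain word overlap. The only point is that an answer can list sources when it was built from retrieved pages, and has nothing to list when it comes from training data alone.

```python
import re
from dataclasses import dataclass, field

# Hypothetical mini "web index": URL -> page text (placeholder content only).
WEB_PAGES = {
    "https://site-a.example/seo-pricing": "placeholder page text about seo pricing and monthly retainers",
    "https://site-b.example/email-benchmarks": "placeholder page text about email open rates",
}


@dataclass
class Answer:
    text: str
    sources: list[str] = field(default_factory=list)  # cited URLs; empty when nothing was retrieved


def tokens(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_web(prompt: str) -> list[str]:
    """Toy retrieval: URLs sharing at least one word with the prompt, best match first."""
    query = tokens(prompt)
    scored = [(len(query & tokens(body)), url) for url, body in WEB_PAGES.items()]
    return [url for score, url in sorted(scored, reverse=True) if score > 0]


def answer(prompt: str, use_web: bool) -> Answer:
    if not use_web:
        # Training-data path: no retrieved documents, so nothing to cite.
        return Answer(text="(generated from training data)")
    urls = search_web(prompt)
    context = " ".join(WEB_PAGES[u] for u in urls)
    # Retrieval path: the pages that fed the answer become its citations.
    return Answer(text=f"(generated from retrieved text: {context})", sources=urls)


print(answer("How much does SEO pricing vary?", use_web=False).sources)
print(answer("How much does SEO pricing vary?", use_web=True).sources)
```

In this sketch, a page that never makes it into the retrieved set can never appear in `sources`, which is why visibility to the retrieval step comes first.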
When LLMs search the web

AI chatbots typically trigger web searches when:

So if your content addresses any of these trigger scenarios (timely information, specialized knowledge, data-backed insights, or authoritative guidance on important topics), you’re already creating the type of content AI tools are programmed to search for and cite.

Why you might get different citations for the same question

Ask the same question a few times, and you’ll often get slightly different answers with different sources. AI systems use probabilistic models, which means responses can vary even for identical questions.

One reason is “temperature”, a parameter that controls how much randomness the model allows when choosing words. At higher temperatures, the AI explores more phrasing options, leading to different answers each time.

Other factors, like your location, the specific model version, and even the time of your query, can also affect which sources appear. Companies frequently update their models and retrieval systems, which can further influence citation patterns over time.

Additionally, the way you phrase your question matters. Slight variations in wording can lead the AI to prioritize different sources or interpretations, even when asking about the same topic.

My personal theory is that since LLMs are trained to satisfy the user’s intent, they sometimes end up “overoptimizing” or “overinterpreting” what’s being asked. When an AI notices that the same question is being asked again, it doesn’t just ignore it; it tries to make sense of why. It might assume the user wasn’t happy with the first answer and adjust its response, much like a human would in the same situation.

In general, when you ask an AI assistant a question, the system breaks your prompt into multiple search queries (a process called query fan-out), then uses retrieval-augmented generation (RAG) to fetch relevant content from the web or its index. The AI then synthesizes information from these retrieved results to construct its response.
This means citations are chosen because your content showed up in the retrieval process and met certain selection criteria. Research, including our own, on AI Overviews, ChatGPT citations, and other AI assistants, reveals consistent patterns in what gets selected.

Freshness is heavily weighted

Our analysis revealed a strong bias toward recently published or frequently updated pages. LLMs appear to weigh freshness as a key factor when selecting sources, particularly for time-sensitive topics.

And we’re not the only ones who found this to be true. Another experiment referred to this behavior in LLMs as a “recency bias”. This likely stems from patterns in their training data, where fresh content is often associated with higher relevance and quality, particularly for time-sensitive topics.

“Across seven models, GPT-3.5-turbo, GPT-4o, GPT-4, LLaMA-3 8B/70B, and Qwen-2.5 7B/72B, “fresh” passages are consistently promoted, shifting the Top-10’s mean publication year forward by up to 4.78 years and moving individual items by as many as 95 ranks in our listwise reranking experiments. (…) We also observe that the preference of LLMs between two passages with an identical relevance level can be reversed by up to 25% on average after date injection in our pairwise preference experiments.”

Domain authority matters (because ranking matters)

From a traditional SEO point of view, websites that get cited by AI tend to have stronger link profiles.

When I analyzed the top 1,000 sites most frequently mentioned by ChatGPT, the data showed a clear pattern: AI favors websites with a Domain Rating (DR) above 60, and the majority of citations came from high-authority domains in the DR 80–100 range.

But this probably isn’t because LLMs directly evaluate domain authority. More likely, these systems retrieve content that ranks highly for their query fan-out terms, and high-DR sites naturally rank better in search results. The correlation with DR is indirect but strong.
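The indirect link between ranking and citations can be illustrated with a small Python sketch. Everything in it is an assumption made for illustration: the `FAN_OUT` sub-queries, the `SEARCH_RESULTS` rankings, and the `candidate_sources` scoring are hypothetical, and real assistants generate sub-queries with a model and apply far richer selection criteria. The sketch only shows that a page ranking near the top for several fan-out queries enters the candidate pool more often than a page ranking for just one.

```python
from collections import Counter

# Hypothetical fan-out: the sub-queries an assistant might derive from one prompt.
FAN_OUT = {
    "How much does SEO cost?": ["seo cost", "seo cost per hour", "seo agency pricing"],
}

# Hypothetical ranked search results per sub-query (best result first).
SEARCH_RESULTS = {
    "seo cost": ["a.example/pricing-study", "b.example/seo-guide", "c.example/blog"],
    "seo cost per hour": ["a.example/pricing-study", "d.example/rates"],
    "seo agency pricing": ["b.example/seo-guide", "a.example/pricing-study"],
}


def fan_out(prompt: str) -> list[str]:
    """Stand-in for model-generated sub-queries; falls back to the prompt itself."""
    return FAN_OUT.get(prompt, [prompt])


def candidate_sources(prompt: str, top_k: int = 2) -> list[tuple[str, int]]:
    """Count how many sub-queries each page ranks in the top_k for."""
    counts: Counter[str] = Counter()
    for query in fan_out(prompt):
        for url in SEARCH_RESULTS.get(query, [])[:top_k]:
            counts[url] += 1
    return counts.most_common()


for url, hits in candidate_sources("How much does SEO cost?"):
    print(f"{url}: retrieved for {hits} sub-queries")
```

With these made-up rankings, the pricing study is retrieved for all three sub-queries, while the page that only ranks third for one sub-query never enters the pool. No authority score is consulted anywhere; ranking position does all the work.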
Semantic relevance to the query

AI systems prioritize content that directly addresses the expanded queries generated from user prompts.

You can see this with Google’s AI Overviews. When you search for something like “how to know when an avocado is ripe,” the cited sources typically contain exact sentences or paragraphs that answer that specific question.

This isn’t about “clarity” in some general sense. It’s about having content that semantically matches what the AI is looking for when it fans out the user’s query into retrievable search terms.

Structured, extractable formatting

Microsoft’s guide on optimizing for AI search confirms what case studies have shown: AI systems favor content with clear meaning, consistent context, and clean formatting.

“Clarity is about more than just word choice, it’s how you phrase, format, and punctuate so AI systems can interpret your content with confidence. AI systems don’t just scan for keywords; they look for clear meaning, consistent context, and clean formatting. Precise, structured language makes it easier for AI to classify your content as relevant and lift it into answers.”

This makes sense from an LLM perspective: the easier it is to extract a relevant passage, the more likely that passage gets used.

Let’s look at a real example: Ahrefs’ “How Much Does SEO Cost?” page is one of our most frequently cited articles. Here’s what, in my opinion, makes it work so well:

It answers a common question directly

“How much does SEO cost?” is one of the most frequently asked questions in the industry, and that demand keeps growing. The more popular the question, the more opportunities there are to earn citations.

Our page tackles the query right away with a clear, straightforward answer at the top; no filler or long-winded introductions.

It’s built on original data (with a timestamp)

The title itself (“439 People Polled”) signals original research that can’t be found elsewhere. When AI tools generate answers about SEO pricing, they often cite Ahrefs because it’s the primary source.

Survey-based content with a clear sample size builds credibility. It’s not opinion; it’s hard-to-get data.
It’s also best practice to include a date for your data and keep it updated regularly, showing both freshness and reliability.

The information is accessible, structured, and practical

The page includes concrete numbers (percentages, price ranges, and averages) and breaks them down by factors like location or service type. This structured layout makes it easy for AI to extract exactly what it needs for different queries.

Importantly, key stats are written in plain text (not buried in images), which helps AI systems access and interpret them.

Unlike theoretical SEO advice, this gives actionable numbers people can use for budgeting, negotiating, or setting their own prices. AI assistants are often asked for practical guidance, and this delivers exactly that.

The content is scannable

Clear headings, distinct sections for each pricing model, comparison tables: everything is organized so both humans and AI can quickly find what they need.

Explores the data from different angles to cover the topic

The question also has multiple variations (agency pricing, freelancer rates, hourly costs, geographic differences), and the page addresses all of them. This means it can answer dozens of related queries from a single source.

Clear expert author byline

The page includes a visible author byline with credentials that establish authority on SEO pricing specifically. It shows someone with direct, relevant experience, instead of a “marketing expert”. What’s more, the data study has been peer reviewed, which adds to the trustworthiness.

Let’s get practical. Here are seven proven tactics to increase your chances of being cited by AI tools.

1. Identify what’s already getting cited in your niche

Before creating new content for LLMs, research what’s already working in your industry.

You can use a tool like Brand Radar to see which pages from your competitors get cited most often. Look for patterns in the content types (guides, research, tools, data pages) and the topics that consistently trigger citations.

Here’s how to find all that in Brand Radar:

You can also plug in your niche in the top-right filter and get a list of most cited content in that niche.

And here’s an example from our own turf.
When I analyzed which of our pages get cited most, I found these patterns. Each format gives AI tools something specific they need: structured data, clear definitions, or authoritative processes.

2. Find and fill citation gaps

Look for topics where competitors are cited but you’re not, or where there’s no great content yet.

It’s a quick and easy analysis if you’re using Brand Radar:

Scan the list of topics to spot strong content opportunities. The Volume column shows how popular each topic is, while the Brand Mentions column indicates whether your brand is already being referenced for that topic. So, you can focus not only on popular questions, but also on topics with few or no mentions to increase your visibility and reach new audiences.

Here are some other ideas.

3. Become the original source

Instead of adding to the noise on crowded topics, create content that you alone can provide, then let others reference you.

Something that we’ve found effective at Ahrefs is original research. For example, Ahrefs’ “SEO Pricing” page (one of our most cited pages) works because it’s based on an original survey of 439 people. We’re the primary source of that data.

Other ideas:

4. Optimize for EEAT to boost AI discoverability

As I mentioned earlier, AI assistants like Perplexity and ChatGPT use Retrieval-Augmented Generation (RAG), meaning they pull real-time information directly from search engines before generating an answer.

That makes your visibility in traditional search results the first step toward being cited by AI. If your content doesn’t rank, AI tools are less likely to see and reference it.

This is where EEAT comes in. Google rewards pages that show clear signs of credibility and expertise. When your content demonstrates strong EEAT, it’s not only more likely to rank higher, but it also becomes part of the trusted pool of information AI systems rely on when assembling their responses.
So the chain looks like this:

Optimizing for EEAT includes things like:

There are no AI-specific shortcuts for this, as far as I know, and I wouldn’t bet on those who take advantage of AI’s immaturity (because that will change). The same principles that build authority in search also build credibility with AI systems.

If you’re new to E-E-A-T, this guide breaks down what it is, why it matters, and how to apply it step by step.

5. Keep content updated and relevant

When AI tools process a query, they first interpret the user’s intent. If that intent indicates a need for up-to-date information, the system automatically searches for recent sources.

This intent can be explicit (with words like “latest,” “current,” or “now”) or implicit, where the topic itself suggests a time-sensitive need, such as ongoing events, trends, or product updates.

To meet that intent, AI systems are designed to prioritize newer documents. This approach not only aligns with what users expect but also helps maintain accuracy and trust, since older information on fast-moving topics can quickly become outdated or misleading.

Here’s how you can put that into practice:

6. Follow SEO best practices when structuring content

AI systems pull from structured, well-organized pages that clearly communicate what each section covers. The more logically your content is built, the easier it is for both Google and AI models to extract and trust your information.

How to structure for both search and AI:

7. Amplify your content to get more backlinks and mentions

The more your content is discussed, shared, and linked across the web, the more likely AI tools are to discover and cite it.

Think of it this way: AI tools find content through web searches. Content that appears in more places, gets referenced more often, and shows up in authoritative discussions has more “entry points” for AI to discover it.

Here’s how you can amplify for AI search visibility:

Once you start optimizing for citations, you need a way to track whether it’s working. Here are your options.
Test prompts manually

Regularly ask relevant questions in ChatGPT, Perplexity, Claude, and Gemini to see if your content appears in citations.

For example, you can create a list of 10-20 questions your content should answer, test them monthly across different AI platforms, and document which sources get cited (yours and competitors’). You can also track changes over time using a simple Google Sheet.

This method is a bit time-consuming and limited in scope, but it’s free and gives you direct insight into what your audience likely sees in ChatGPT.

Monitor your website analytics

Use a tool like Ahrefs Web Analytics (available for free in Ahrefs Webmaster Tools) to check where your referral traffic is coming from.

In Ahrefs, AI search traffic is already tracked as its own channel, so you don’t need to set up any custom filters or use regex like you might with other analytics tools.

While AI traffic is currently small, you can still track trends over time. You can look at which pages receive AI referral traffic from being cited in AI answers and how that traffic behaves (time on page, bounce rate). To view this data, choose AI Search as the channel, then click View more under the list of pages.

You can also examine whether the traffic from AI referrals converts. If the goal is reaching a specific page, like a thank-you page or pricing page, simply set up the top-level filters like this:

You can track conversions for specific actions, such as sign-ups, downloads, or demo requests. Start by setting up event tracking. Once you’re done with that, use the top-level filters like so:

Paid monitoring at scale

If you want comprehensive tracking without manually testing hundreds of prompts, try Ahrefs’ Brand Radar or a similar tool.

Brand Radar monitors your citations across 150 million prompts in six major AI platforms. The features include:

Just a quick glance at the dashboard already shows you where you stand against competitors.
For example, when we look at Asana’s performance, the data shows Asana heavily dominates in Google’s AI features (AI Overviews and AI Mode), which account for the vast majority of its citations. However, there’s a significant opportunity gap in standalone AI assistants like ChatGPT, Gemini, and Copilot, where citations are much lower. The trend graph shows sustained high visibility over the past few months with a slight recent decline, suggesting the need to maintain momentum.

Brand Radar does more than just track citations. It also lets you monitor where and how your brand or products are mentioned, and compare your AI “share of voice” across platforms like Google’s AI Mode, AI Overviews, Gemini, ChatGPT, Copilot, and Perplexity.

Final thoughts

Citations position your brand as an authority and put your name in front of people at the exact moment they’re researching your industry. While the traffic they generate is modest, the visitors who do click through often show stronger intent and higher conversion rates than traditional search traffic.

The brands investing in citation optimization now are claiming authority before the space gets crowded. In two years, when everyone’s fighting for AI visibility, you’ll already be the established source.

Got questions or comments? Let me know on LinkedIn.

Citations

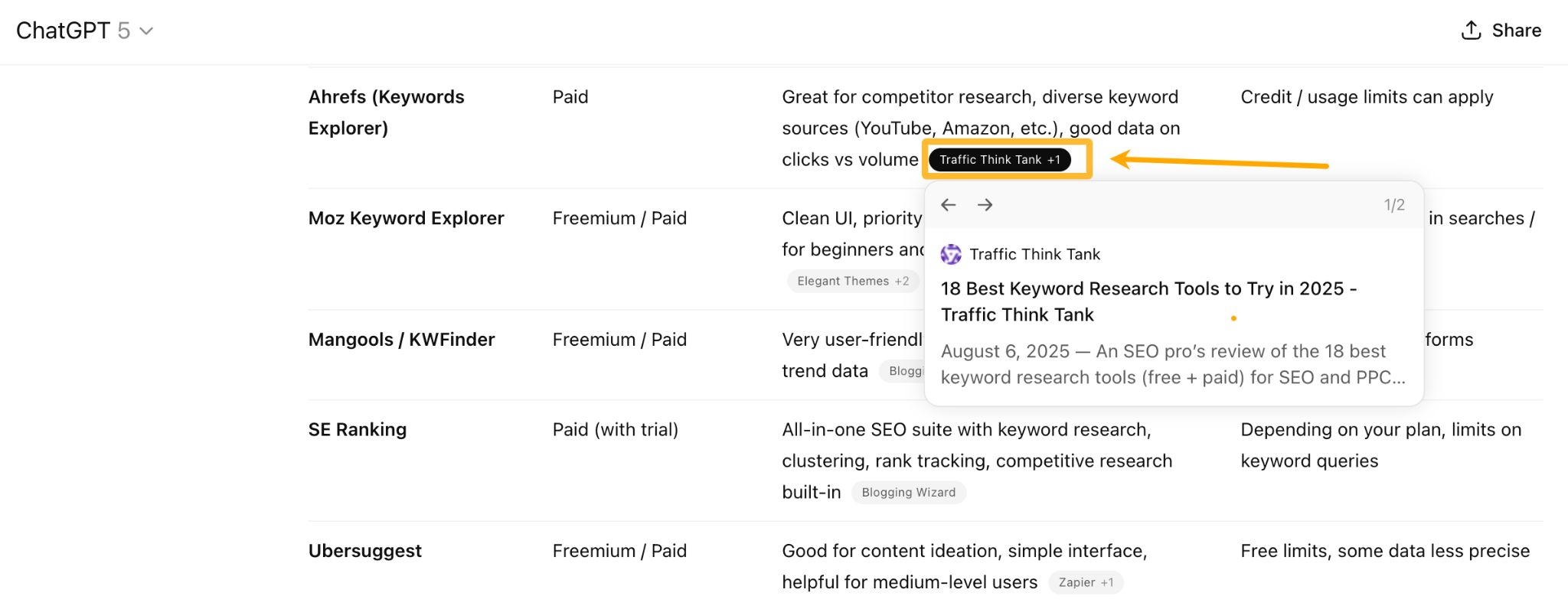

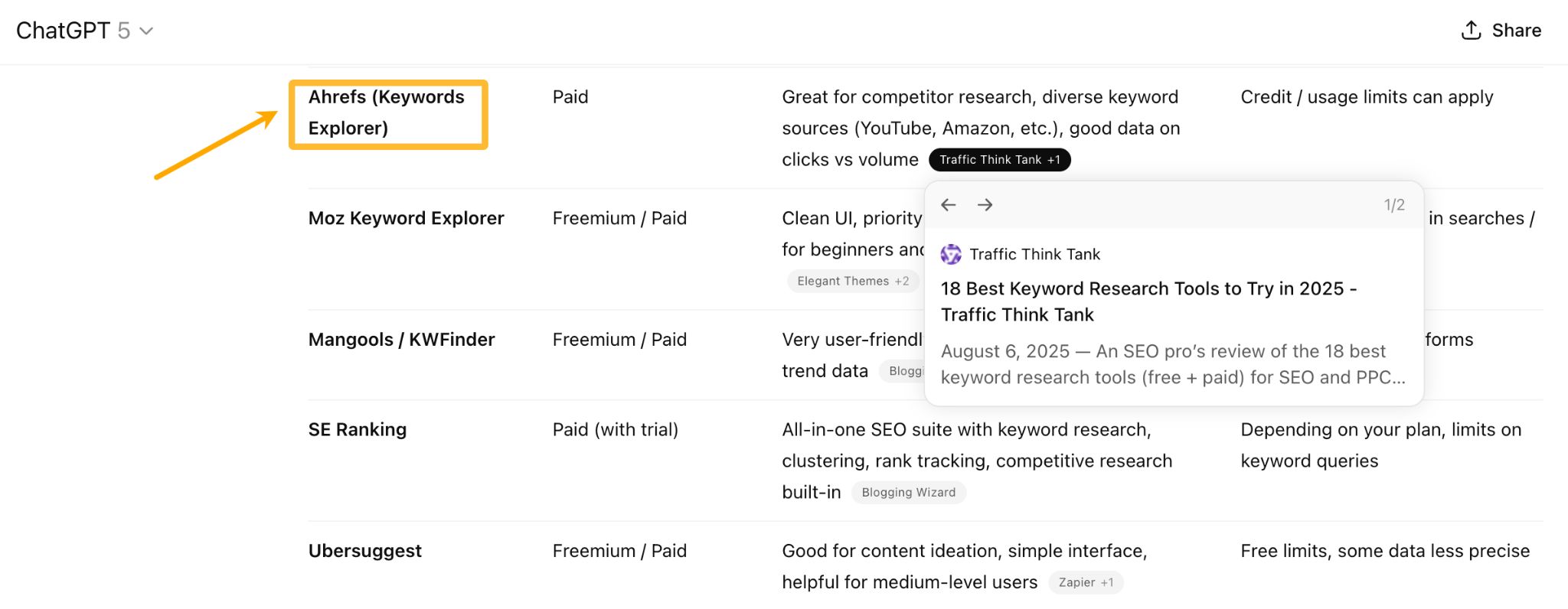

Mentions

The best of both worlds

They provide measurable, attributable traffic

They signal trust and authority

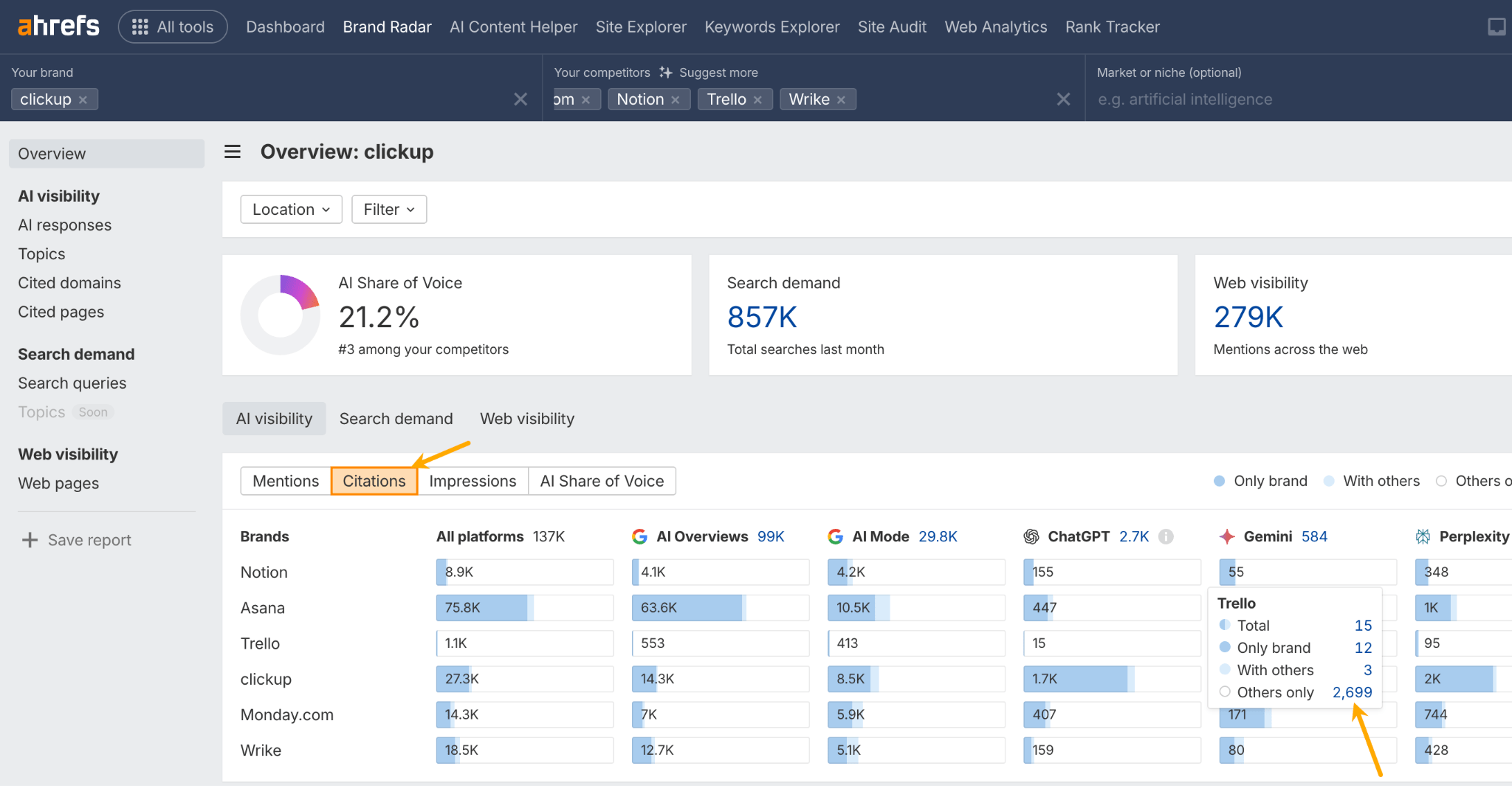

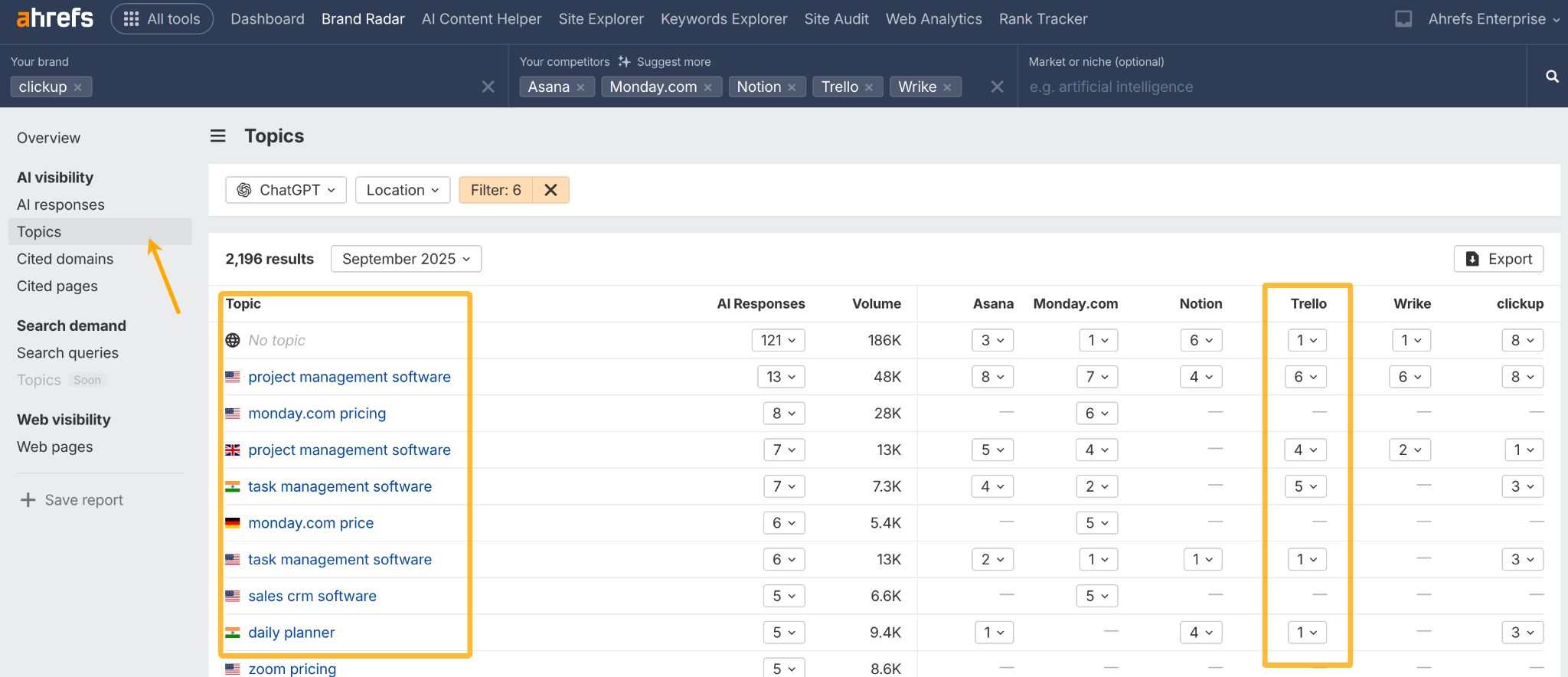

Data via Ahrefs Brand Radar.

They build brand recognition in a new marketing channel

Two sources of information

When LLMs search the web

Why you might get different citations for the same question

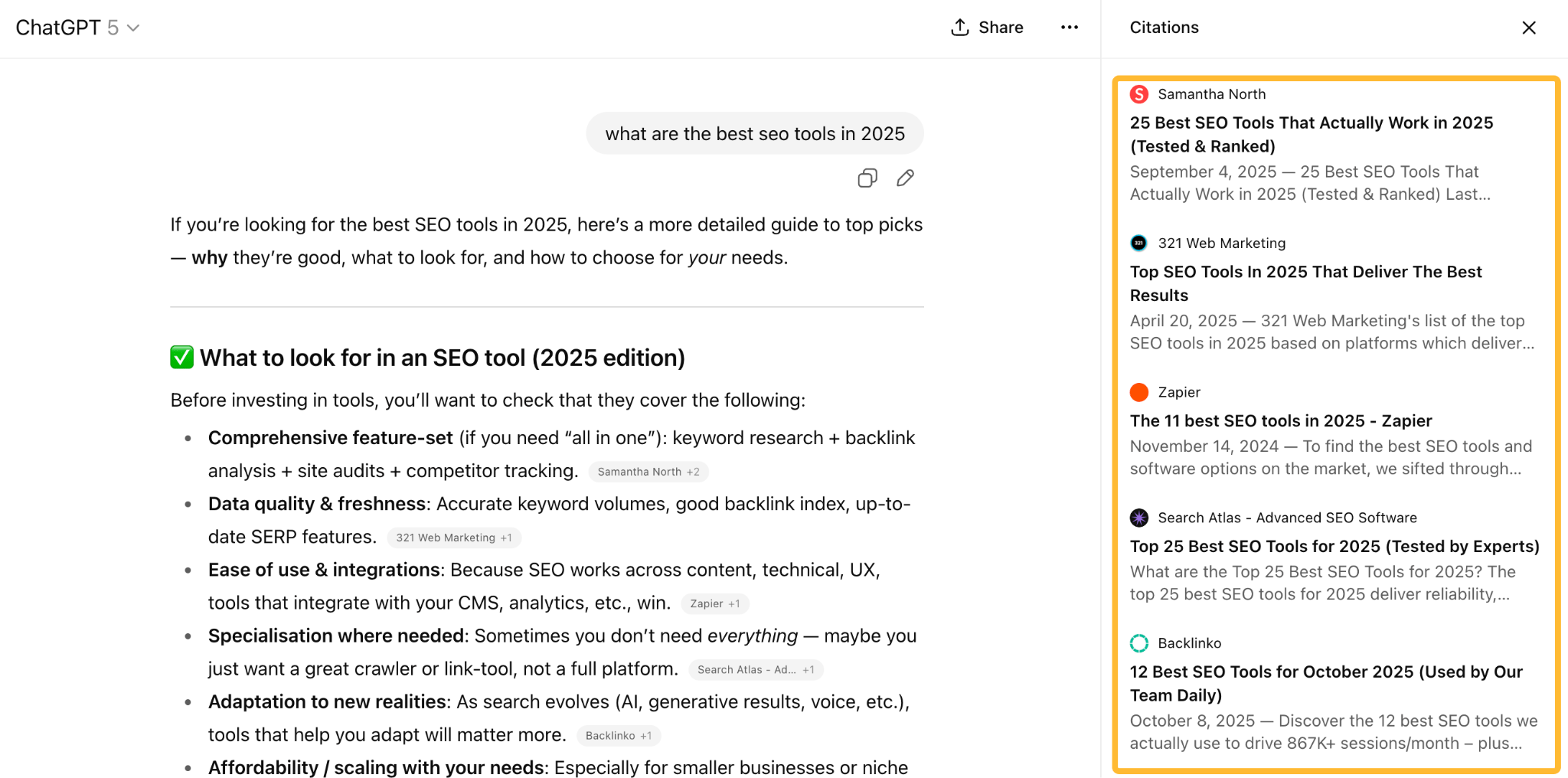

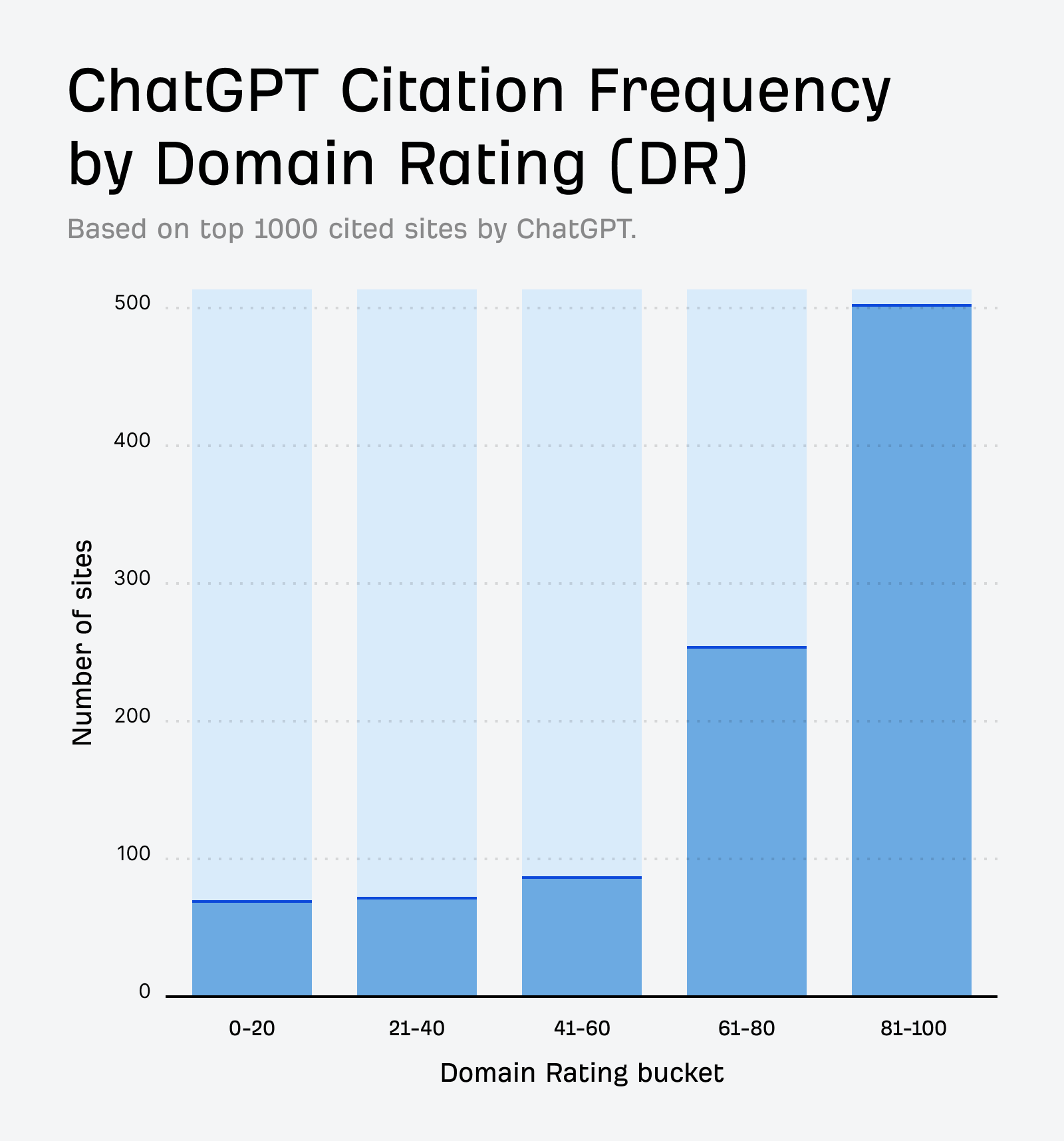

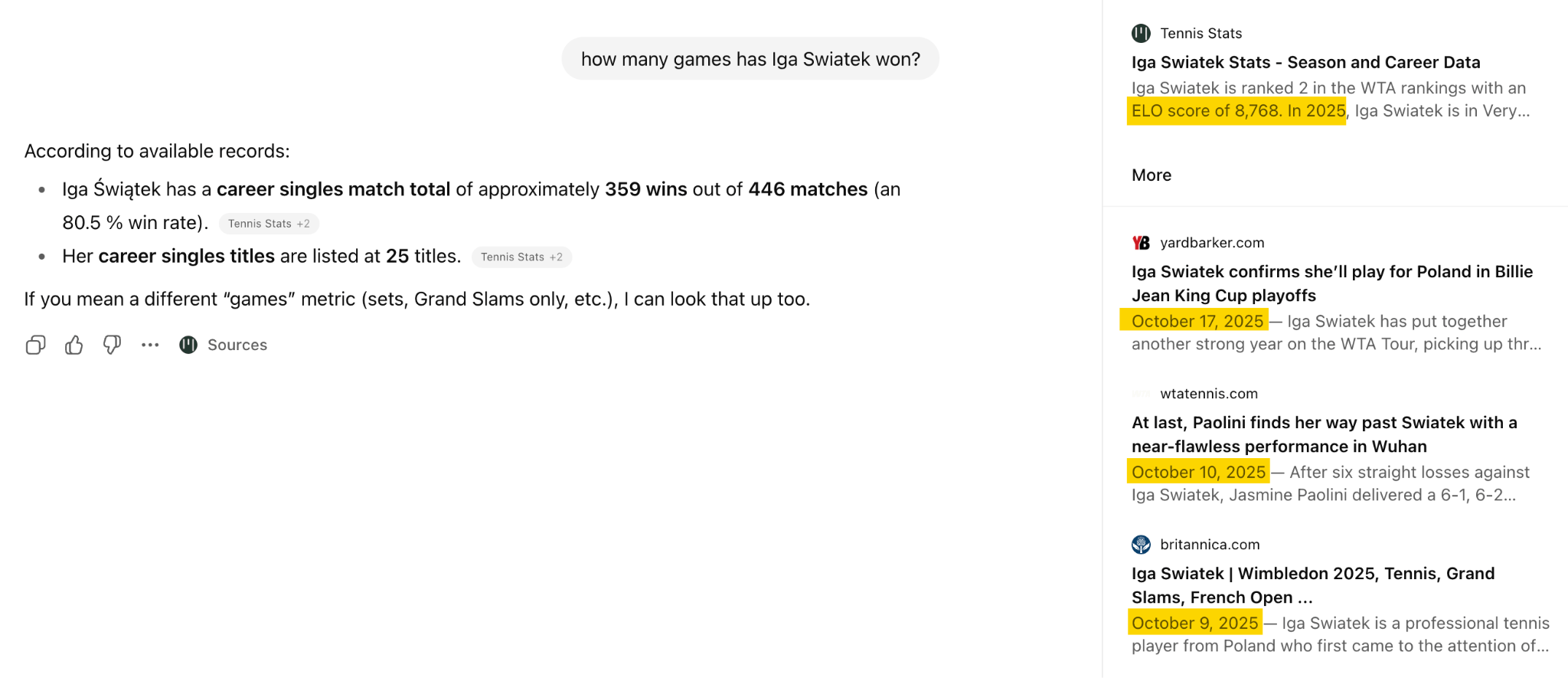

My question and ChatGPT’s answer with citations.

Same prompt asked a second time. Notice the difference in citations.

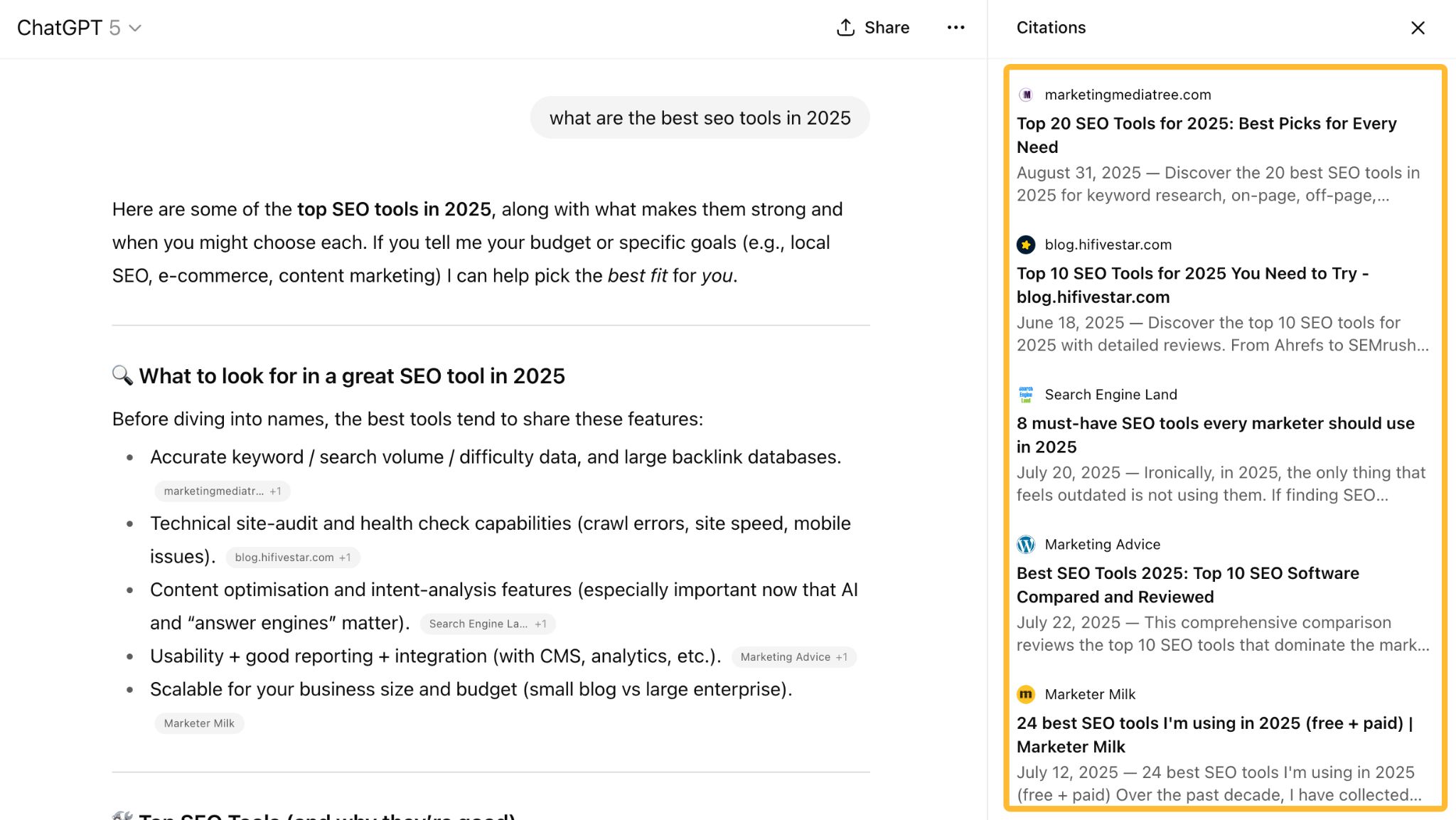

Freshness is heavily weighted

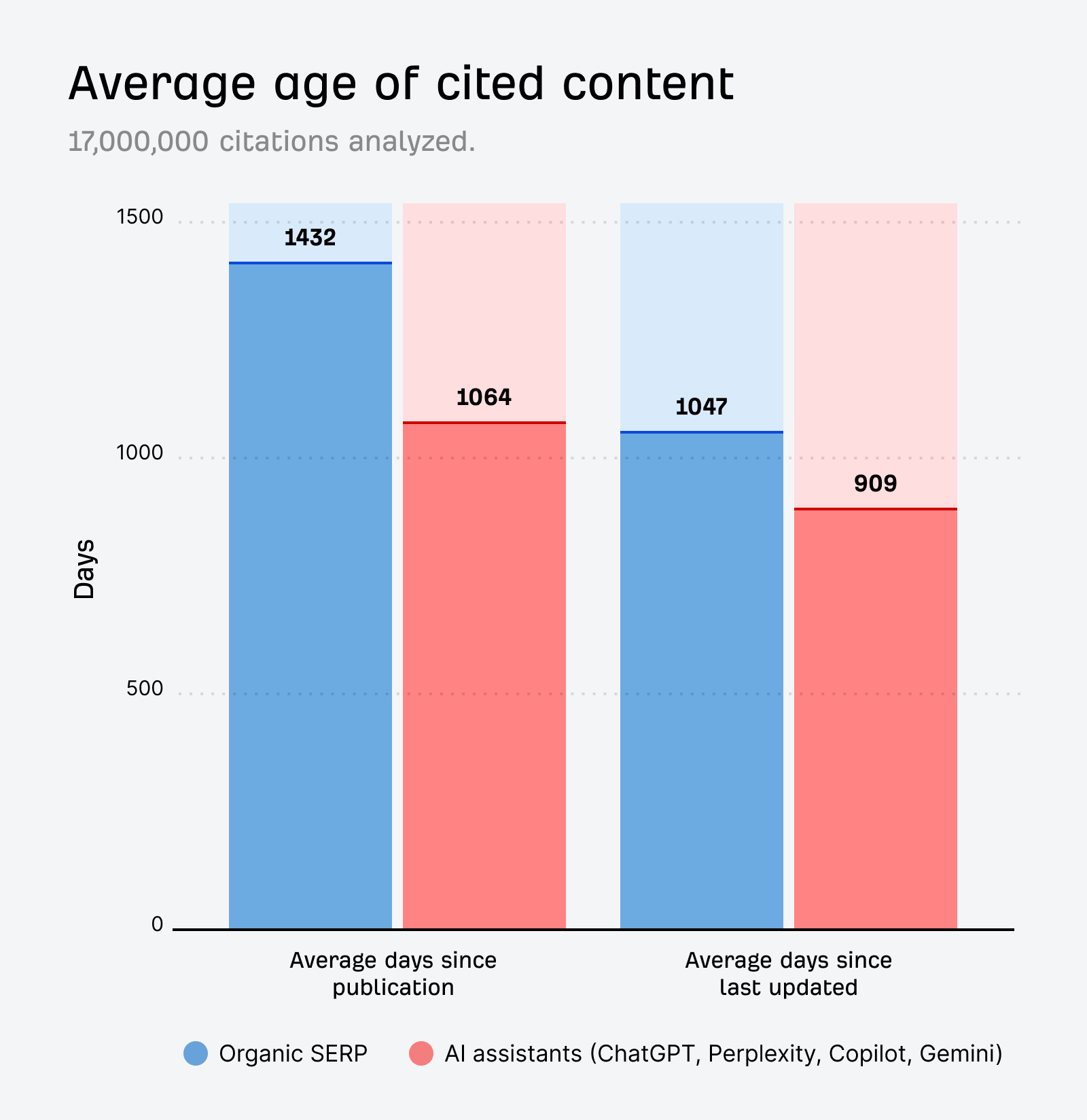

Domain authority matters (because ranking matters)

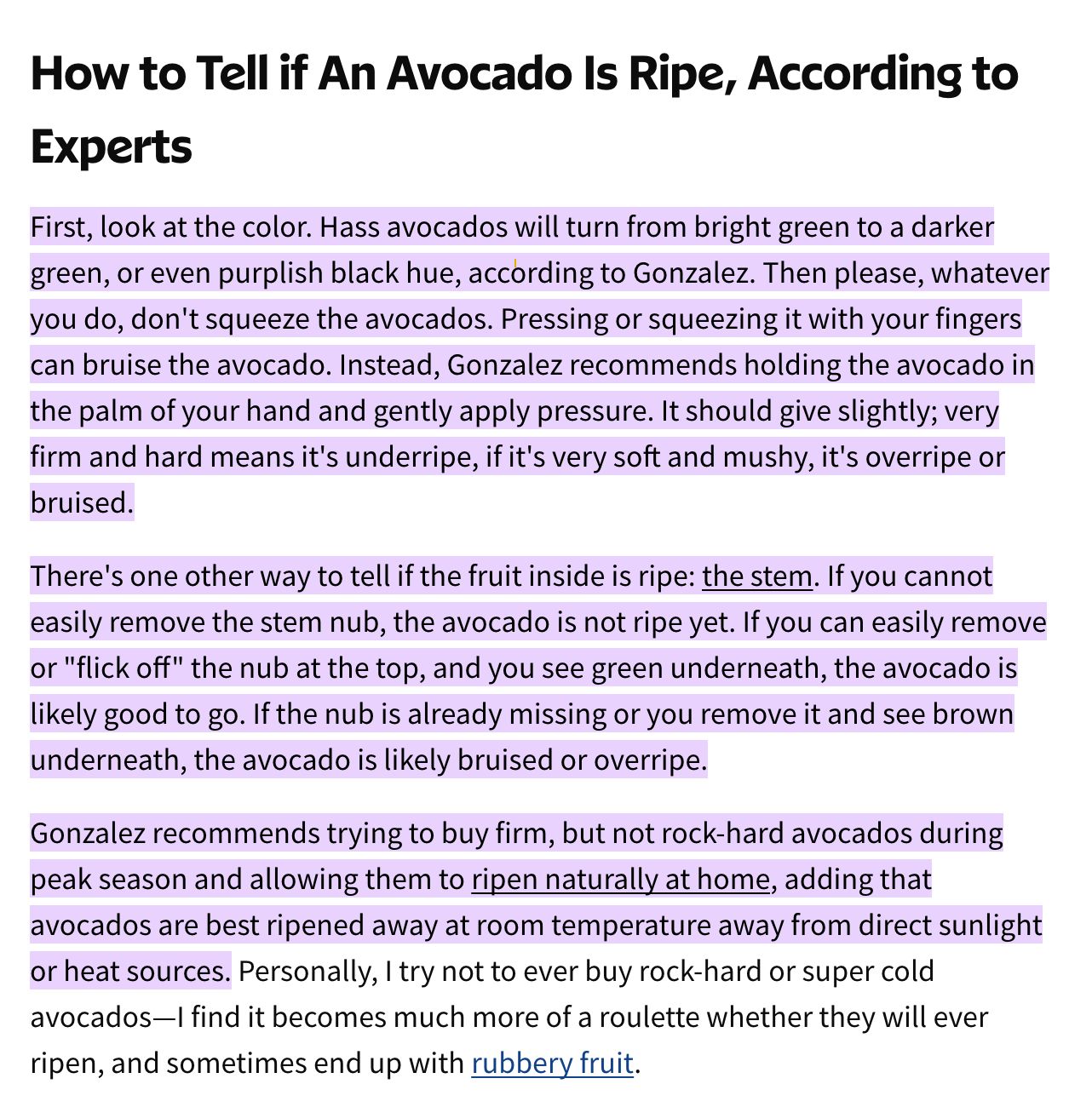

Semantic relevance to the query

Structured, extractable formatting

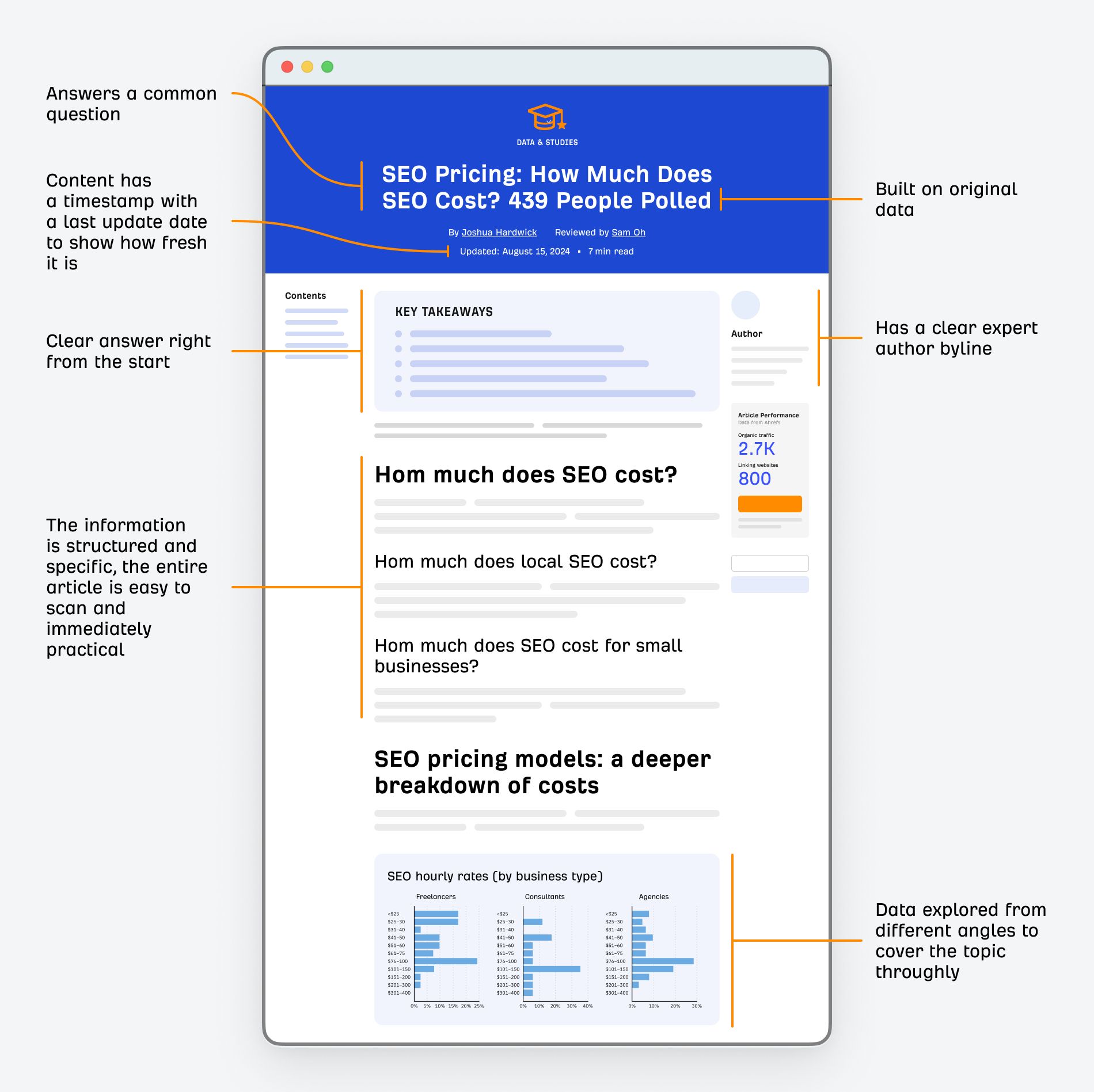

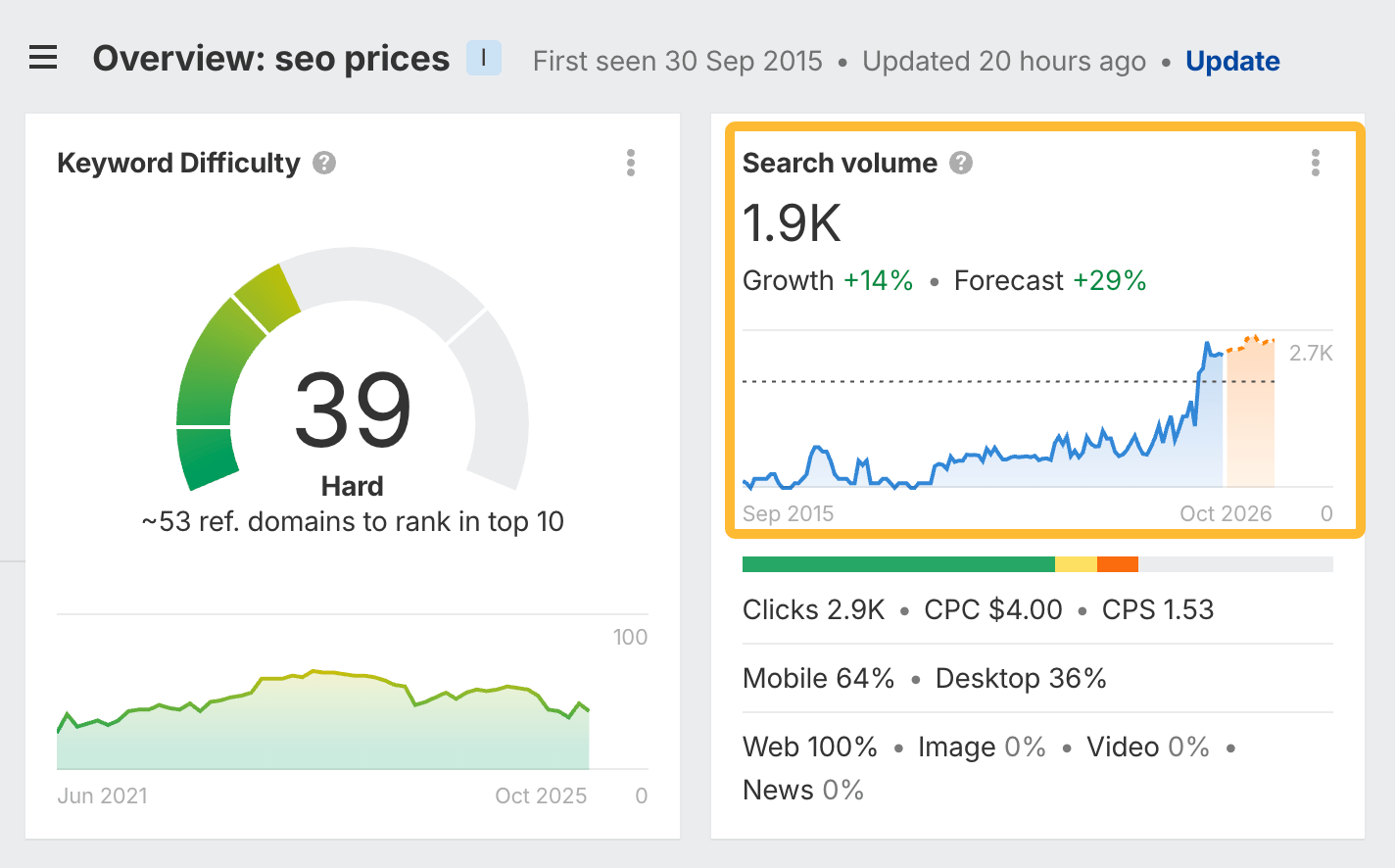

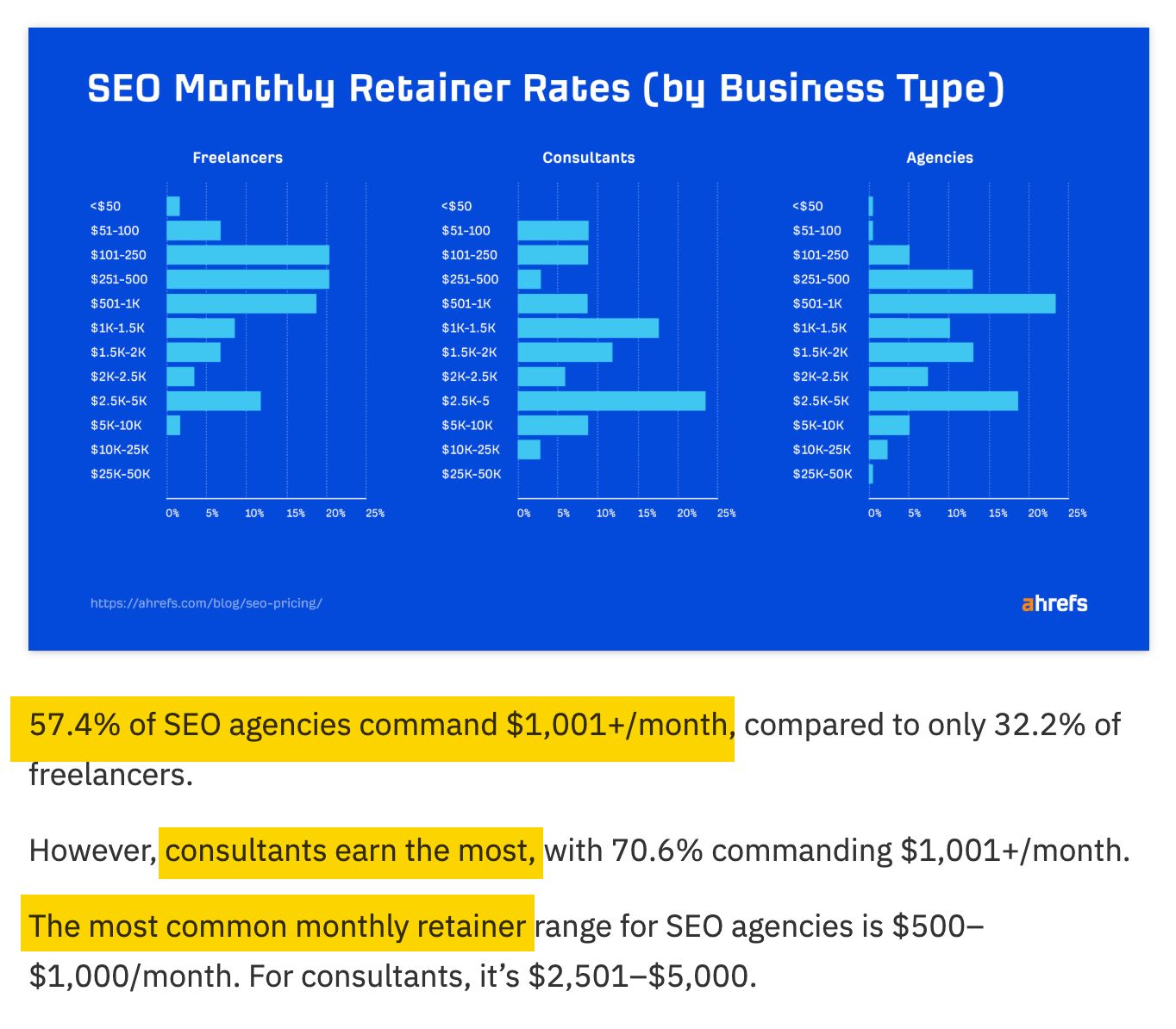

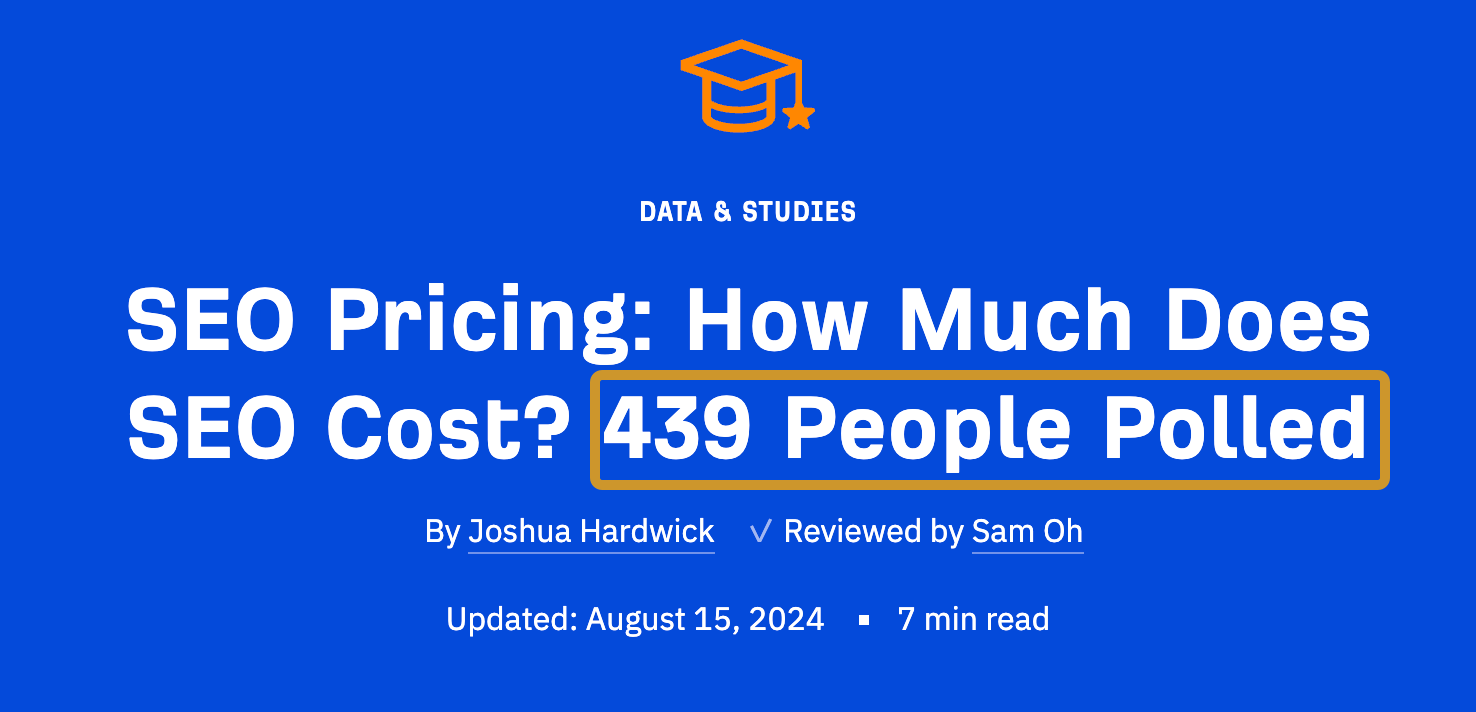

It answers a common question directly

It’s built on original data (with a timestamp)

The information is accessible, structured, and practical

The content is scannable

Explores the data from different angles to cover the topic

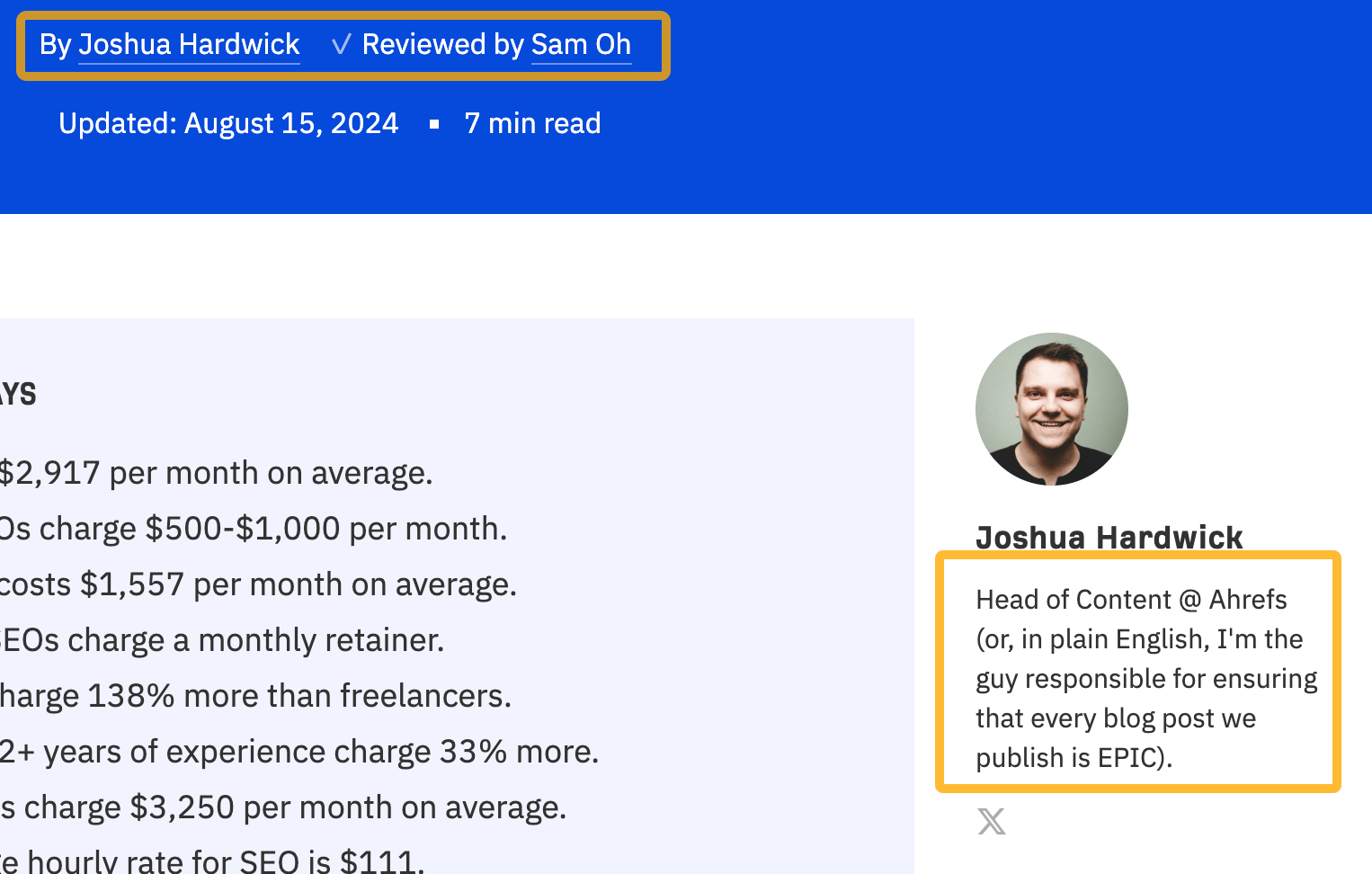

Clear expert author byline

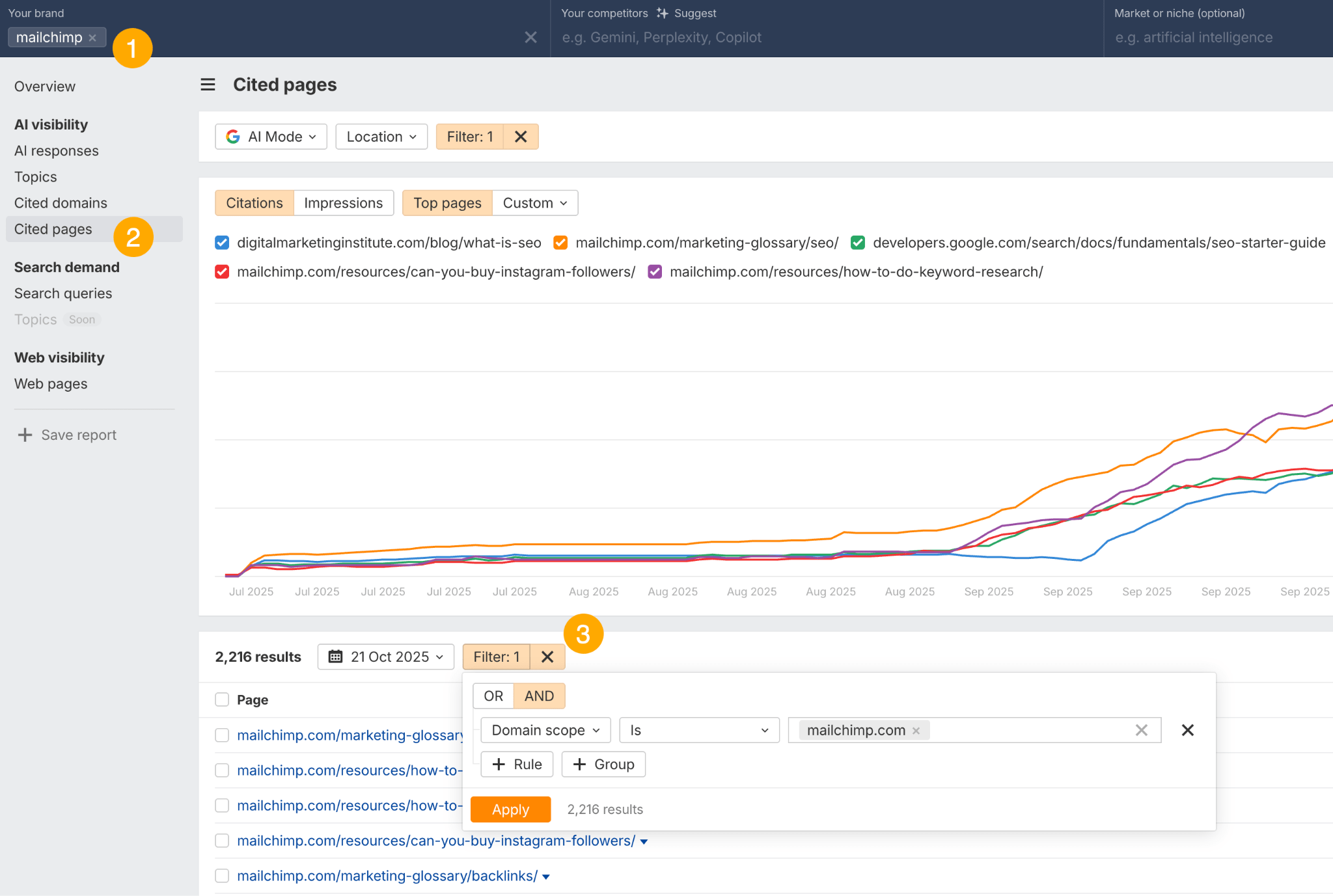

1. Identify what’s already getting cited in your niche

2. Find and fill citation gaps

3. Become the original source

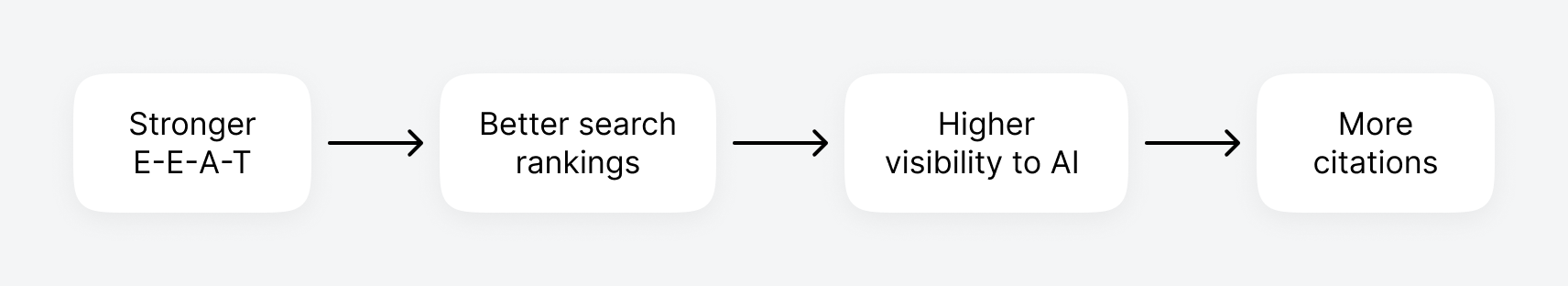

4. Optimize for EEAT to boost AI discoverability

5. Keep content updated and relevant

6. Follow SEO best practices when structuring content

7. Amplify your content to get more backlinks and mentions

Test prompts manually

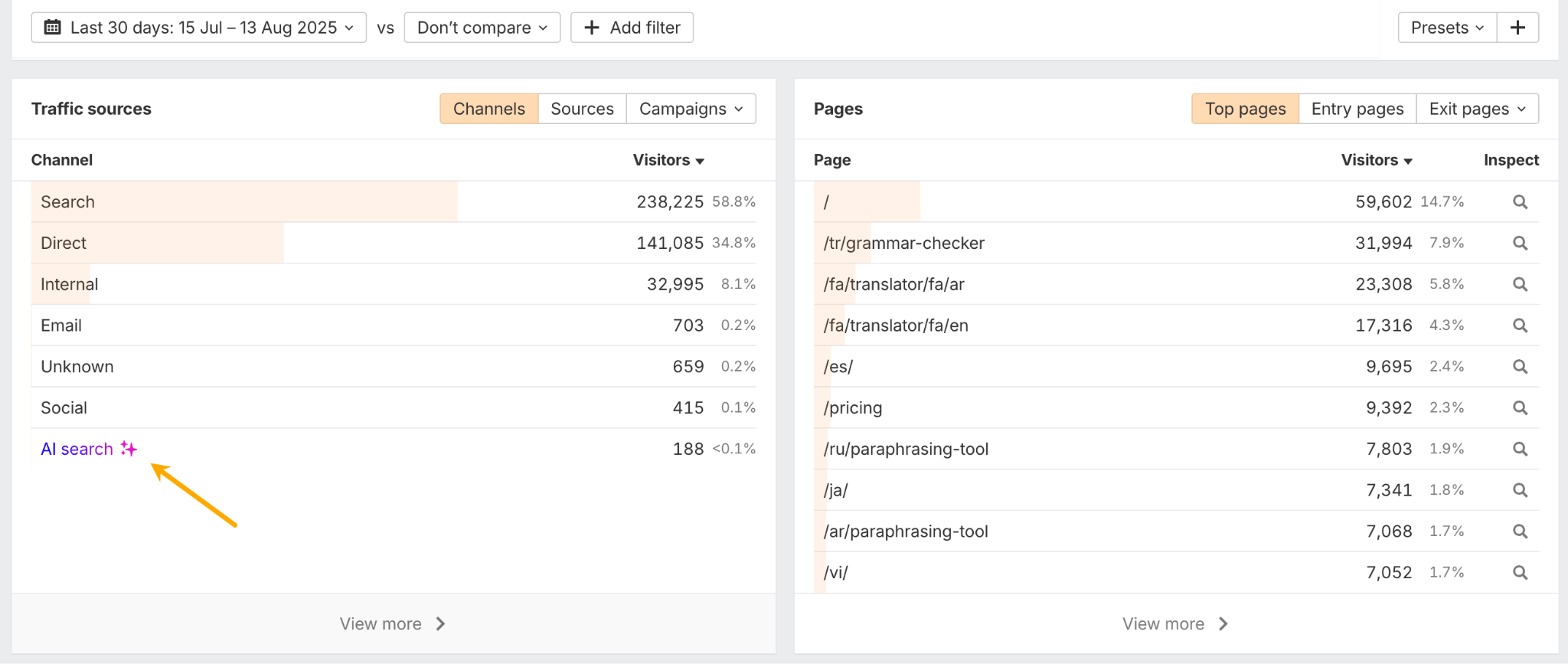

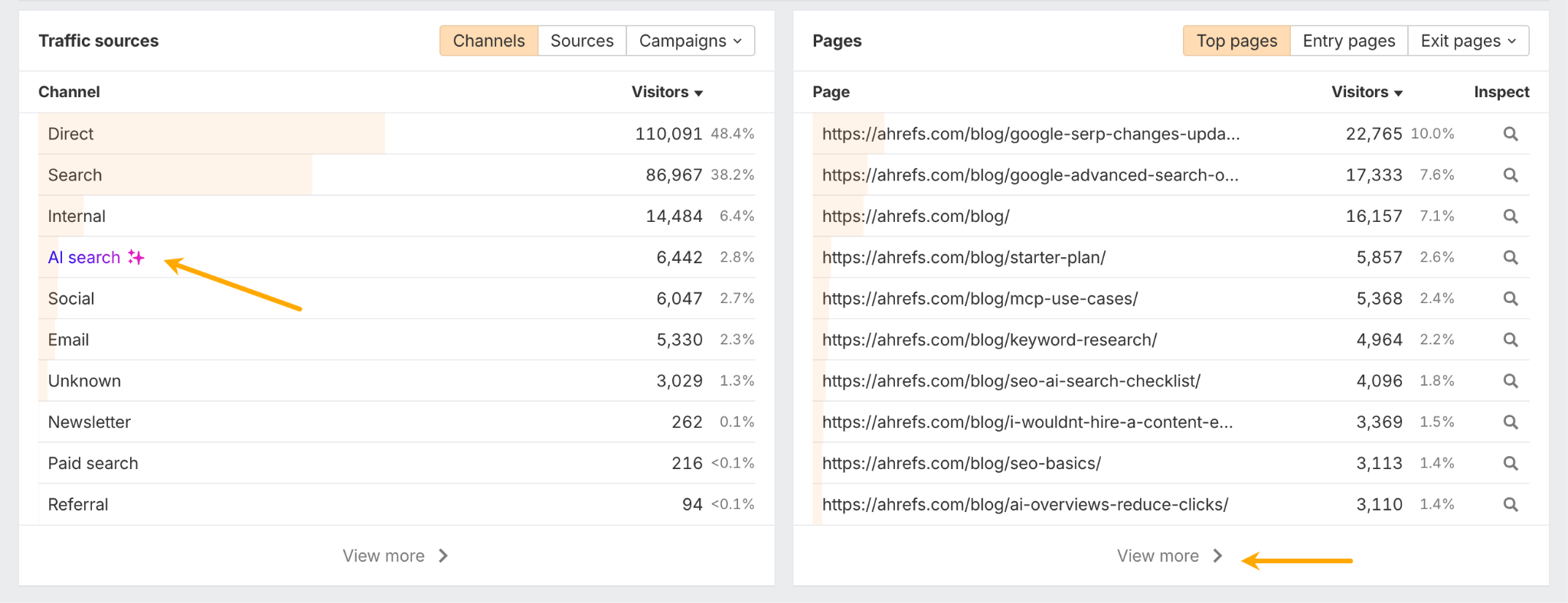

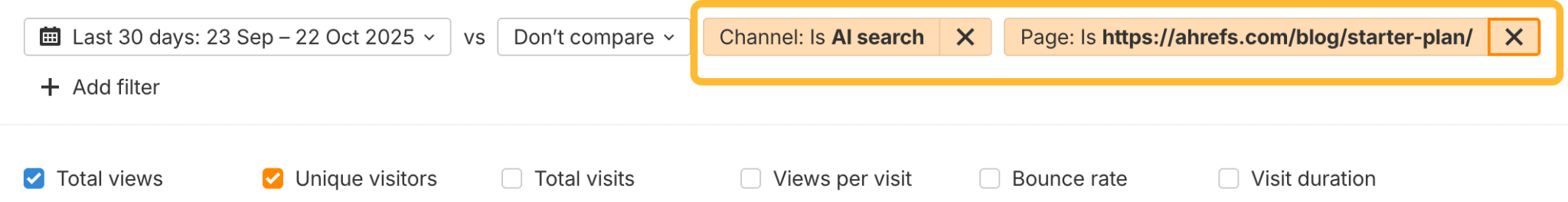

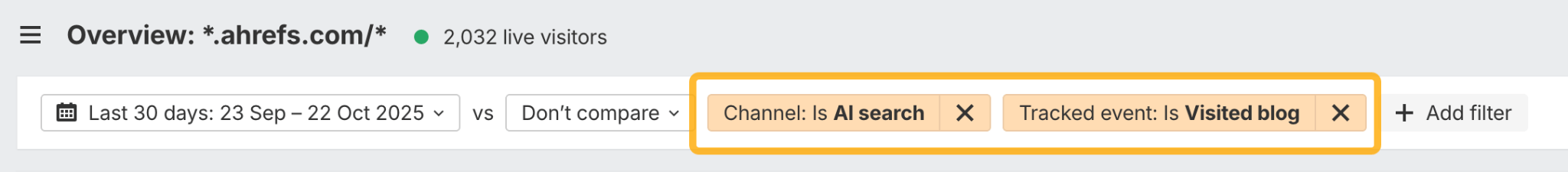

Monitor your website analytics

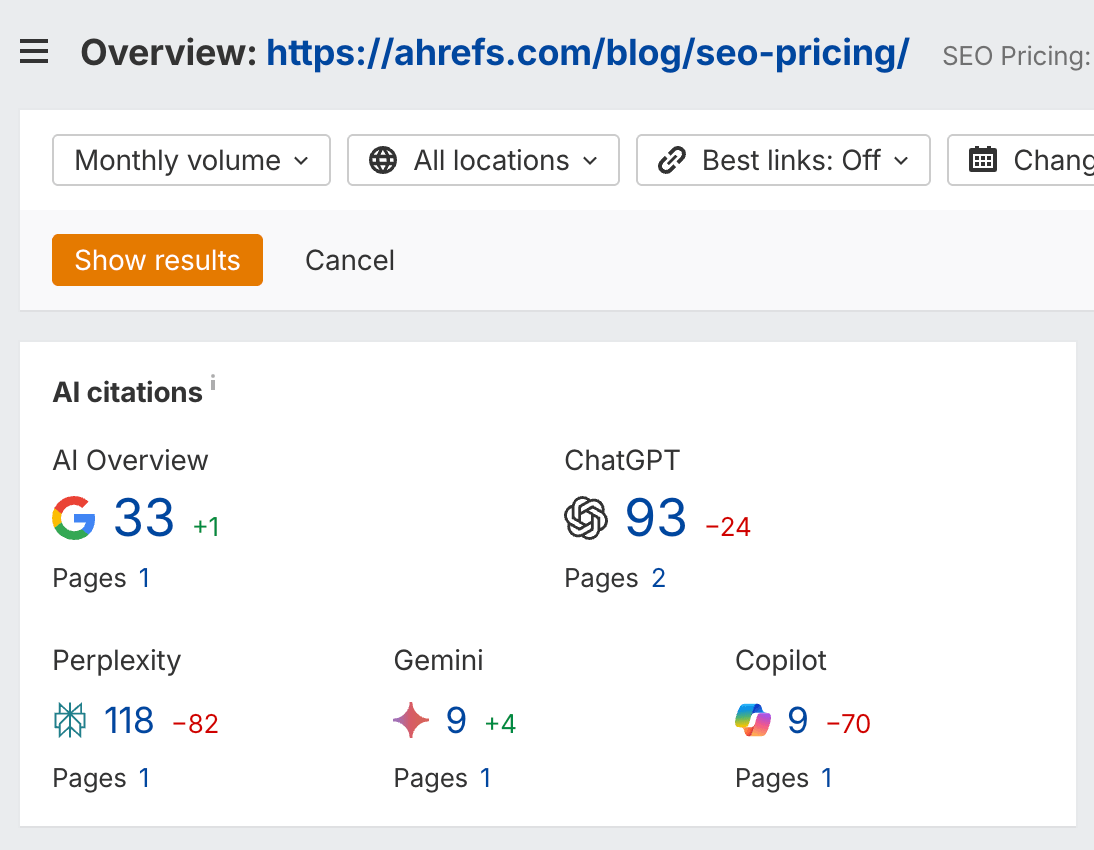

Paid monitoring at scale

Final thoughts

Kass
