X Tests Collaborative AI-Powered Community Notes

AI will compose a note, then humans will be able to refine it.

Of all of Elon Musk’s many changes to the platform formerly known as Twitter, Community Notes remains the most interesting, and the integration of AI-generated Community Notes adds another valuable element to a process that can significantly reduce the spread of misinformation in the app.

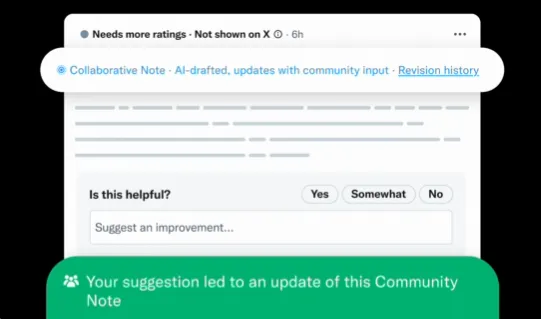

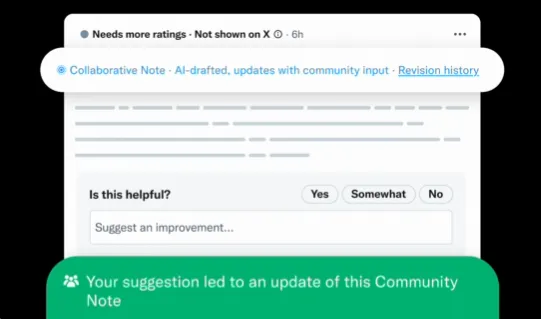

And this latest update to Community Notes is another interesting step, with X now testing out Collaborative Notes, which will make it easy for human Community Notes contributors to guide and improve AI-generated Notes.

As explained by X:

“When [a user] requests a note, AI drafts one, then the community refines it together through ratings and suggestions. You can watch it get better in real time.”

So rather than relying on the AI response alone, and risking a flawed or misinterpreted AI answer being presented as a fact-check, Community Notes contributors will now be able to easily rate and respond to these AI notes, which could ensure that they’re updated with more relevant, accurate information.

That could help to address some key flaws with the notes system. A major problem with notes is the time that it takes for one to be displayed, because X is focused on real-time, in-the-moment discussion. If it takes even 15 minutes for a note to be shown on a post, then at least some of the damage will already be done, and it’s not possible to get notes up on these posts in real time.

But AI helps to solve this. AI-generated notes are only created when a user requests one, but they can be shown much faster, and the capacity to then refine them with similar speed, based on human ratings, could help to further reduce the impact of false claims.

It also assists in data gathering to support Notes, as the AI response will hopefully reference the relevant source material quickly, reducing the need for contributors to go through extensive work to provide relevant context.

Overall, it does seem like a helpful addition. But the fact that the system is using Grok as its AI fact-check creator is also potentially problematic, because as Elon has repeatedly told us, he’s put in specific work to make Grok “less woke,” which means that, on some of Musk’s pet issues, it’s likely to spread false information regardless.

Indeed, Grok has been found to promote false information about past events, including a terrorist attack in France, and it has also given incorrect information about the Holocaust, and about Musk himself.

So there may be some errors in the full AI process behind Community Notes, but as an isolated component within the Community Notes architecture, it seems like it could provide some benefit.

Really, you shouldn’t blindly trust any AI-generated response, as these models are trained on internet data, and the internet has a lot of incorrect info polluting its sources. That’s especially true when X posts are the source data. Still, it’s another interesting element to consider in trying to create a better fact-checking process.

