Are AI Mode and AI Overviews Just Different Versions of the Same Answer? (730K Responses Studied)

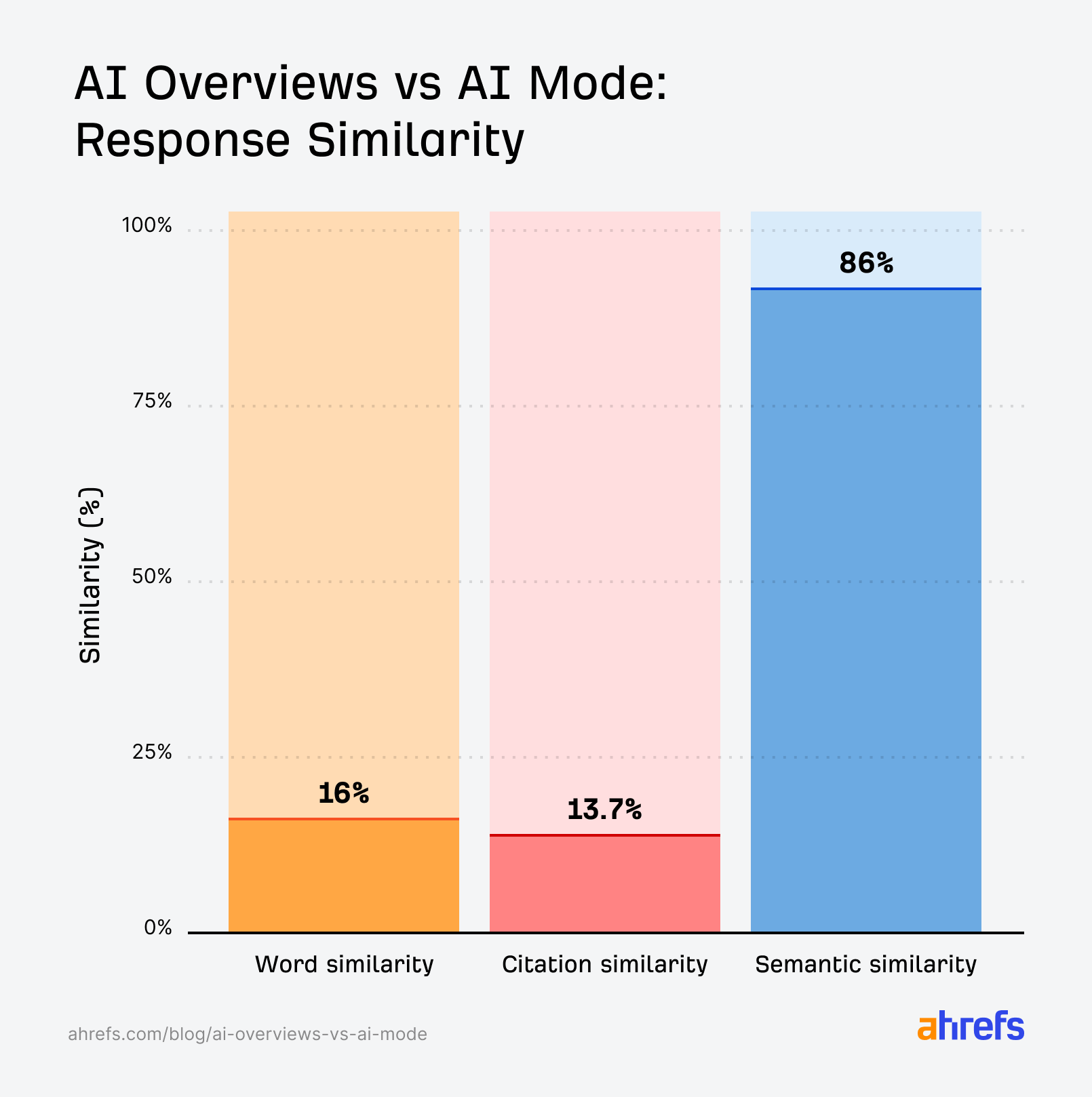


Article Performance

Data from Ahrefs

The number of websites linking to this post.

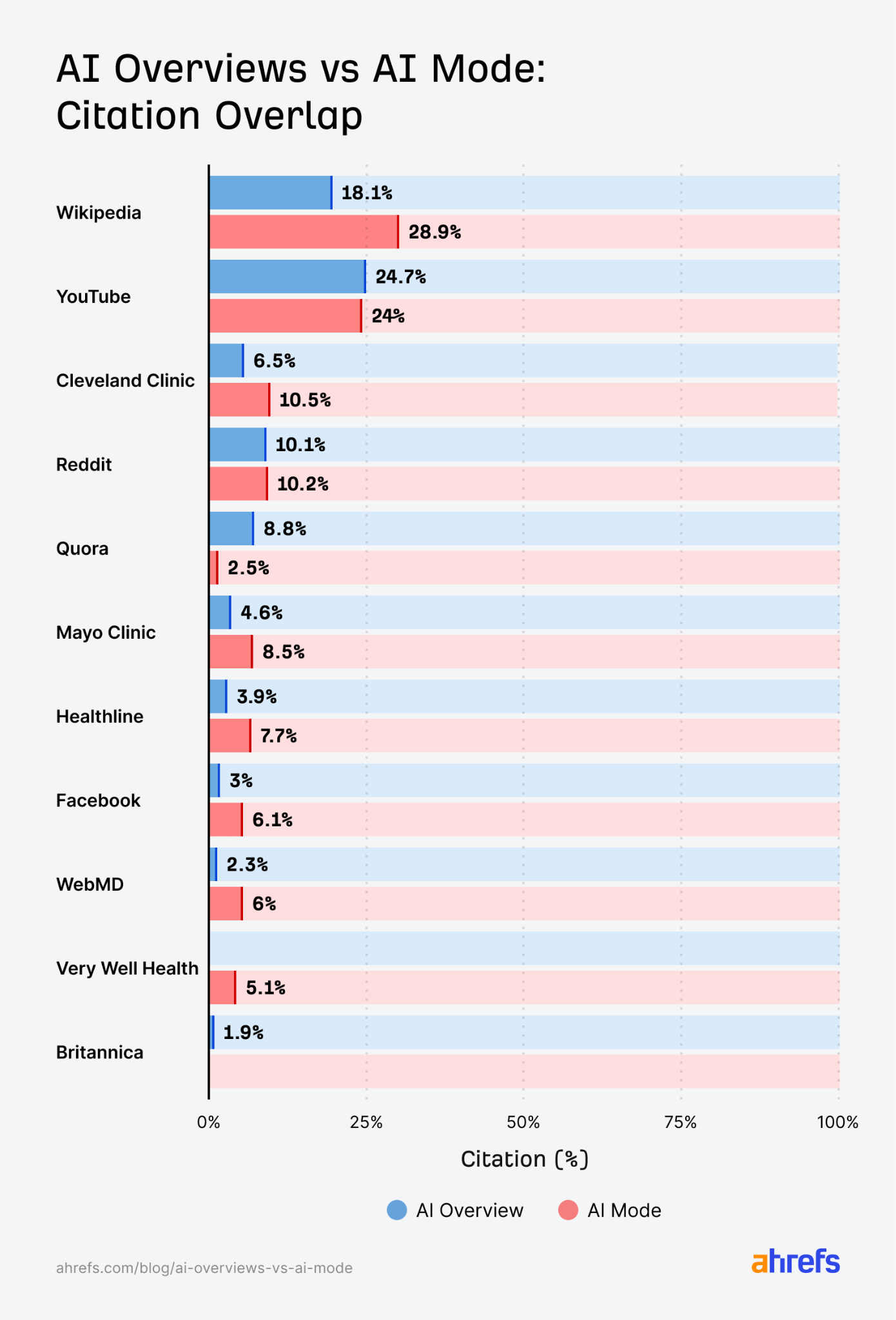

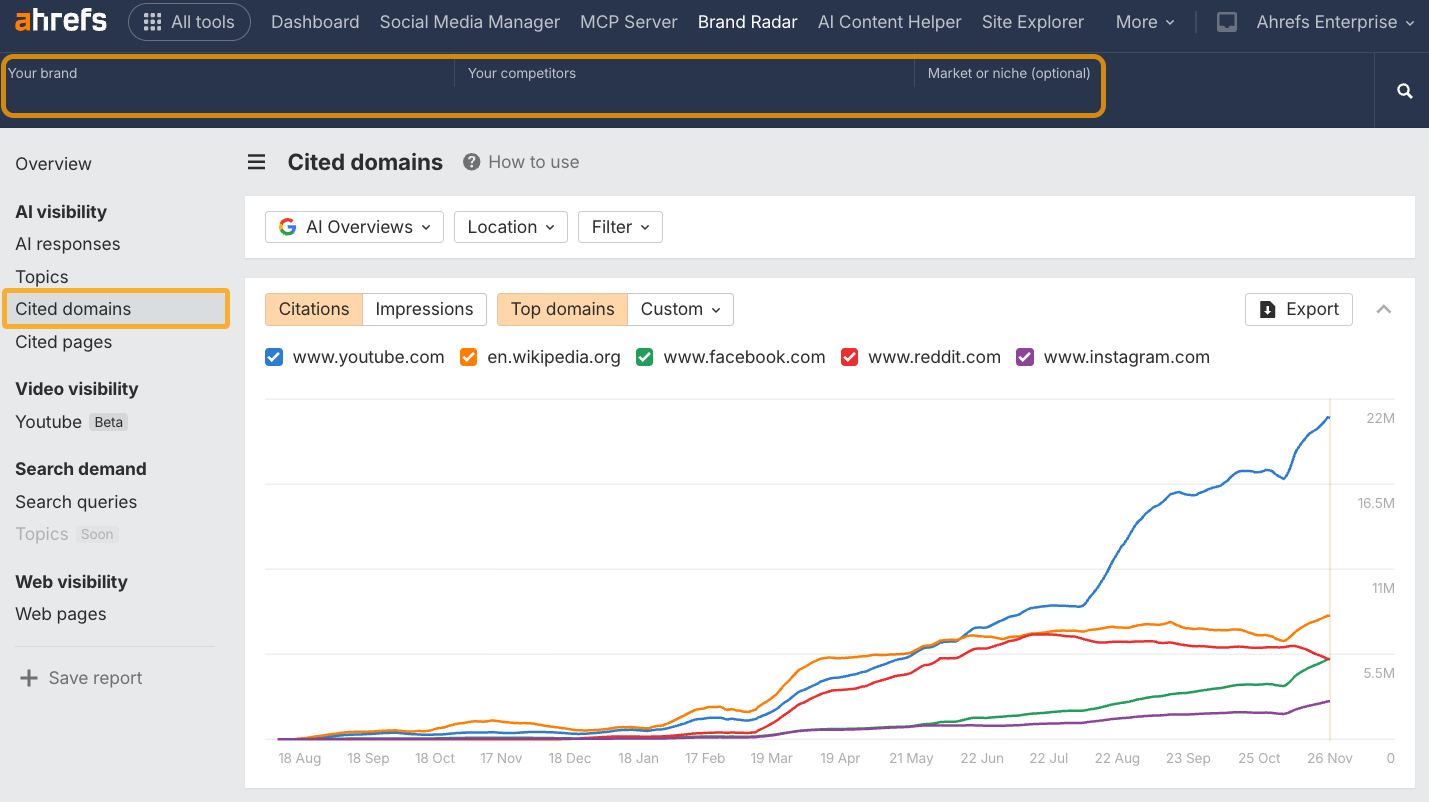

This post's estimated monthly organic search traffic.

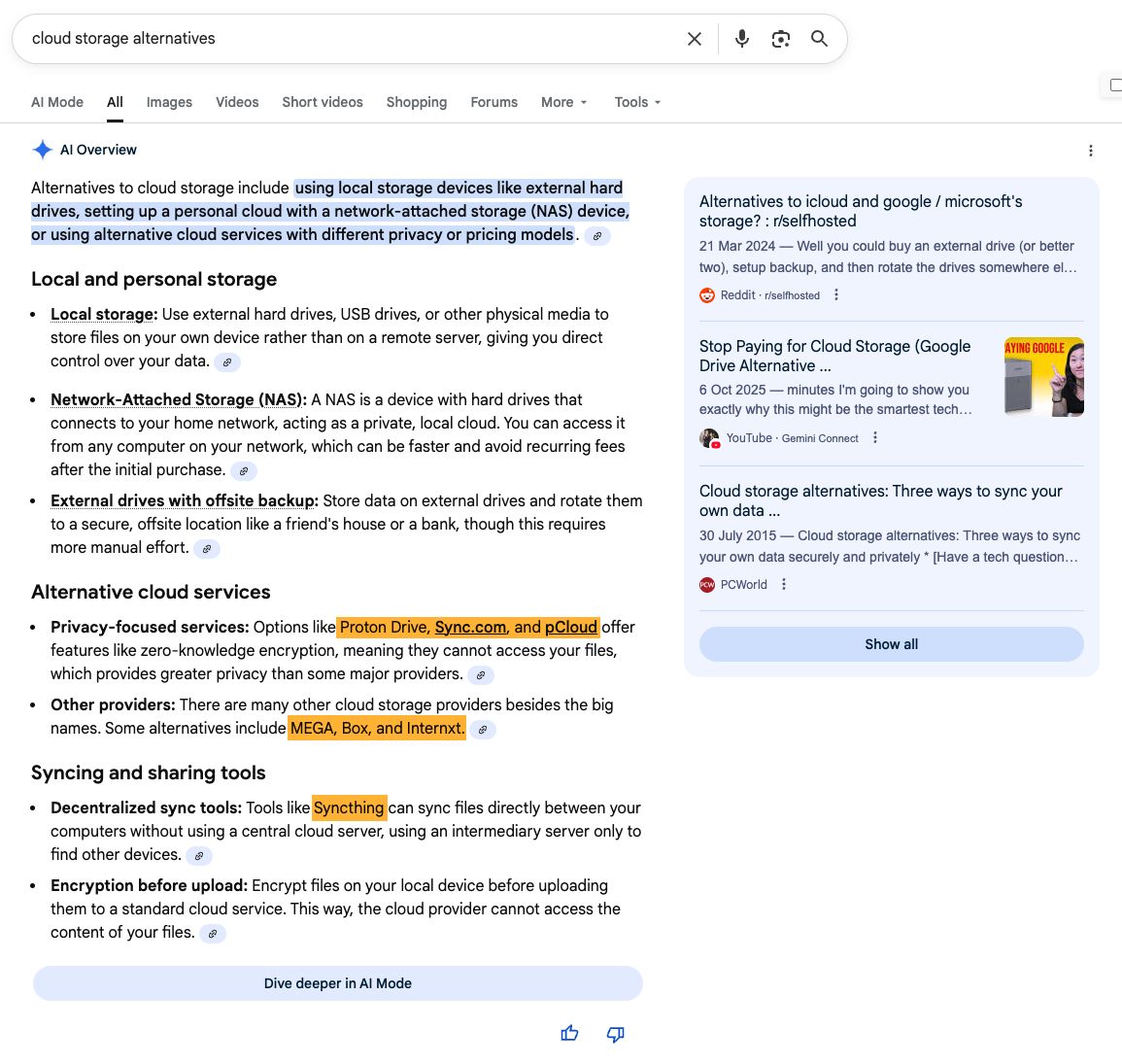

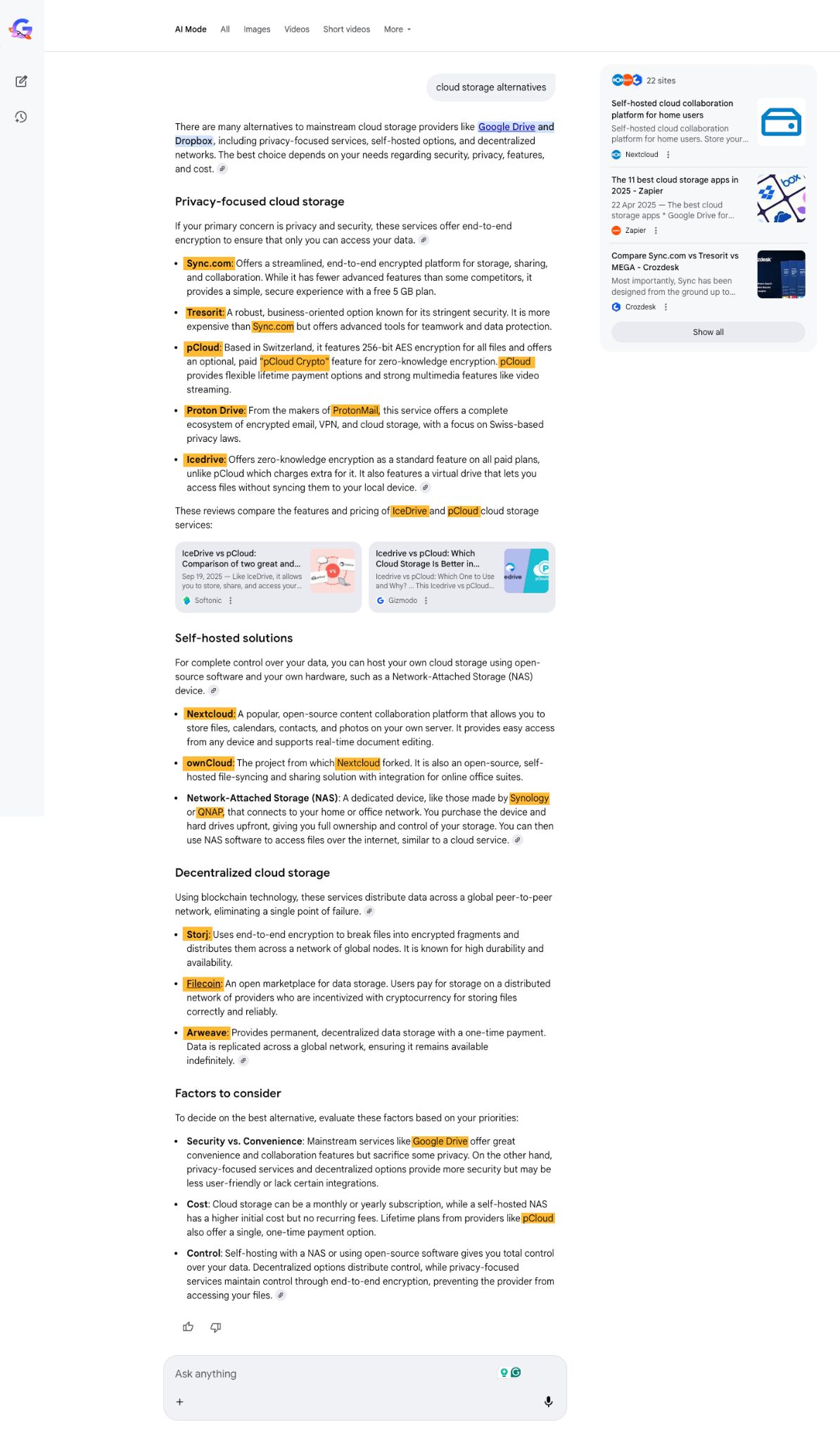

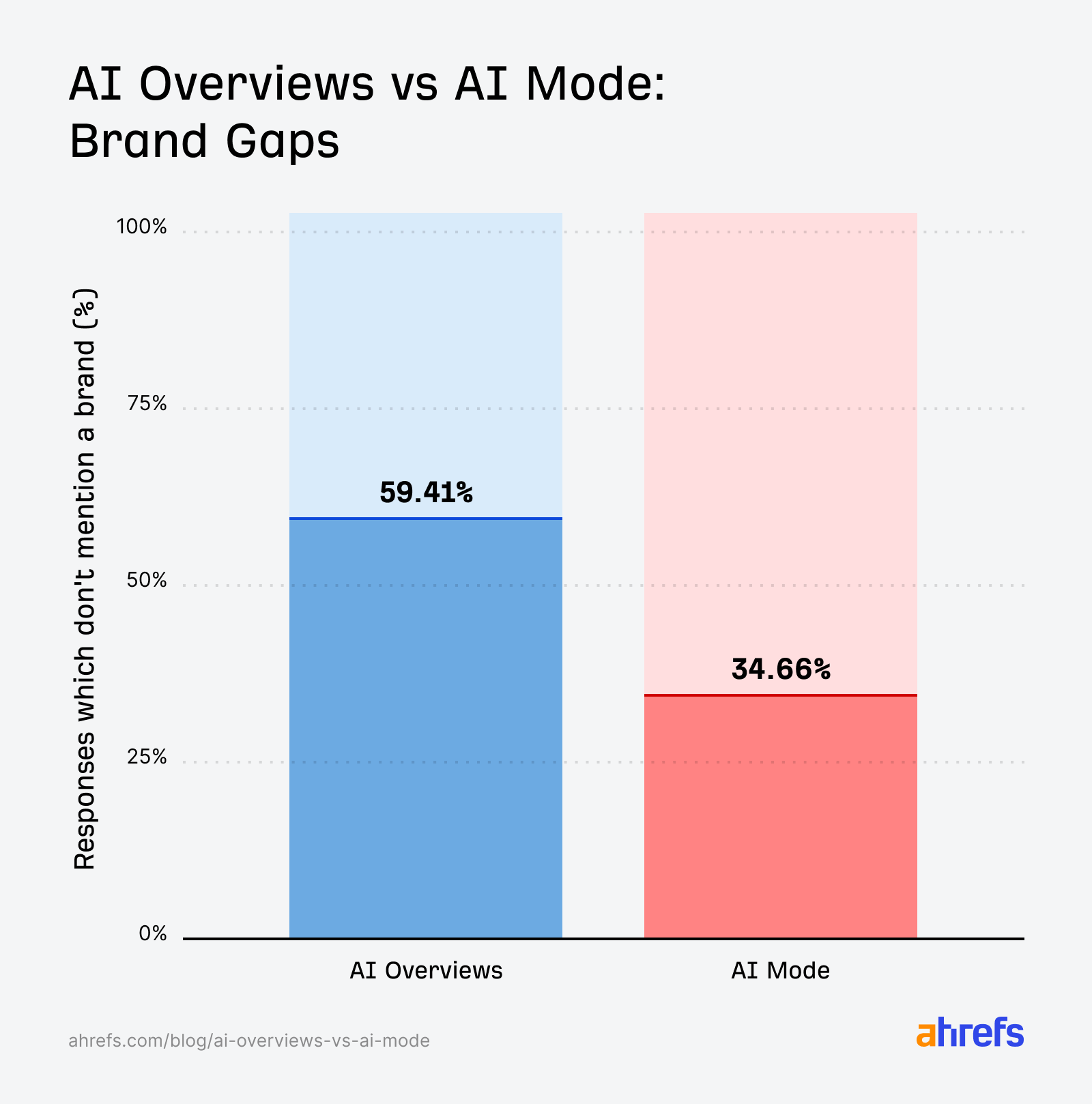

Google’s AI Mode generates responses that are 4x longer than AI Overviews (on average). When we first noticed this, the natural assumption was that AI Mode simply expands on the same information, taking AI Overview’s concise answer and adding more detail from the same sources.

But after analyzing 730,000 response pairs, we found something unexpected: AI Mode and AI Overviews reach very similar conclusions (86% semantic similarity) while citing different sources (only 13.7% citation overlap).

This matters because it suggests these aren’t just a “short version” and “long version” of the same answer. They’re two distinct systems that happen to converge on similar conclusions through different paths.

For marketers and SEO professionals, this raises critical questions:

We analyzed citation patterns, content similarity, and entity mentions across hundreds of thousands of queries to find out. Here’s what we discovered.

We analyzed September 2025 US data from Ahrefs’ Brand Radar, examining 540,000 query pairs for citation and URL analysis, and 730,000 query pairs for content similarity analysis. For each query, we captured both an AI Mode response and an AI Overview response.

We measured citation overlap by identifying how many URLs appeared in both AI Mode and AI Overview responses for the same query. We also tracked domain preferences to see which websites each platform cited most frequently.

For content similarity, we used two metrics: Jaccard similarity to measure word-level overlap (calculated as unique words in common divided by all unique words), and cosine similarity to measure semantic overlap on a scale from 0 (completely different) to 1 (identical meaning).

We also analyzed entity overlap by counting how many people, organizations, and brands were mentioned in both responses.

Sidenote. This analysis compares single generations of AI Mode and AI Overview responses. Our previous research showed that 45% of AI Overview citations change between generations.
This means the citation pools available to each system may overlap more than our single-snapshot comparison suggests. However, the low overlap we observed (13.7%) indicates that even when multiple sources could support an answer, AI Mode and AI Overviews often select different ones in practice.

AI Mode and AI Overviews cited the same URLs only 13.7% of the time. When we looked at just the top 3 citations from each, the overlap was slightly higher at 16.3%.

In other words, roughly 86% of the time, these two systems draw on different sources to answer the same query.

The domain preferences also differed between the two systems:

The most notable differences: AI Mode appears to lean more heavily on encyclopedic and detailed medical sources as it builds longer responses, while AI Overviews show a stronger preference for video content and community-driven platforms like Reddit.

However, both systems also showed similar preferences for content types:

AI Overviews cited videos and core pages (like homepages or main category pages) nearly twice as often as AI Mode, while both systems overwhelmingly preferred article-format content.

Try this yourself

You can see which sources AI Overviews and AI Mode cite the most in Ahrefs’ Brand Radar. Run a blank search and check the Cited domains report. Filter by AI Overview and AI Mode separately to see their preferred sources. You can also filter for your target topics or specific brands if you want to analyze a more specific segment of the data. Check out our guide to the best filter combinations to try.

AI Mode and AI Overview responses had a Jaccard similarity score of just 0.16, meaning only 16% of the unique words overlapped between the two responses for the same query. They started with the exact same first sentence only 2.51% of the time, and produced identical responses in just 0.51% of cases.

This tells us that AI Mode isn’t simply taking AI Overview’s answer and adding more detail to it.
Instead, both systems appear to research the query independently and formulate entirely new responses with minimal word or citation overlap. Even when they agree on the answer (which they do 86% of the time semantically, as we’ll see next), they’re expressing it in fundamentally different ways.

Here’s where things get interesting: despite low word overlap (16%) and minimal citation overlap (13.7%), AI Mode and AI Overview responses achieved a semantic similarity score of 86% on average.

We measured this using cosine similarity. On a scale where 1.0 means “identical meaning” and 0 means “completely unrelated,” nearly 90% of response pairs (89.7%) scored above 0.8, indicating strong semantic alignment.

Put simply: 9 out of 10 times, AI Mode and AI Overview agreed on what to say. They just said it differently and cited different sources.

There are many possible reasons for this. One that Google’s documentation confirms is that both AI Mode and AI Overviews use “query fan-out” to help display a wider and more diverse set of helpful links. Query fan-out is a process that runs multiple related searches to find supporting content while responses are being generated.

Since AI Mode and AI Overviews use different models and techniques, they can easily cite different sources even when reaching similar conclusions. This explains why they agree on what to say while disagreeing on where they found it.

Think of it like two experts answering the same question. They might use completely different words and reference different studies, but if they’re both knowledgeable about the topic, their answers will convey the same core information. That’s what’s happening here: the systems are drawing from a consistent understanding of each topic, even as they express it differently.

AI Mode responses are roughly 4x longer than AI Overviews and mention significantly more people and brands (3.3 entities on average compared to AI Overviews’ 1.3).
For example, looking at the keyword “cloud storage alternatives”, the AI Overview mentions seven brands only once each, and only toward the end of the response:

The AI Mode response, by contrast, includes 23 brand mentions, repeating some brands throughout the reply. Every component of the response contains a brand, including the very first sentence:

It’s easy to think that since AI Mode answers are 4x longer, that’s naturally why they also include more brands in absolute terms. But AI Overviews are often more selective about whether they mention brands at all, which limits the amount of brand exposure available within the response itself.

But here’s what’s perhaps most interesting: 61% of the time, AI Mode includes every entity that AI Overview mentioned, then adds more on top.

For example, an AI Overview might mention Mayo Clinic as a health authority. AI Mode’s response includes Mayo Clinic but also adds Cleveland Clinic and WebMD. The core authority appears in both, but AI Mode expands the expert pool.

This means that if your brand is mentioned in AI Overviews, there’s a good chance it’ll also appear in AI Mode’s longer response. But you’ll be sharing space with additional competitors or sources that didn’t make the AI Overview cut.

This pattern suggests AI Mode is building on a similar foundation of related entities as AI Overviews rather than starting from scratch with an entirely different approach.

Not every response includes citations or brand mentions. For instance, 59.41% of AI Overview and 34.66% of AI Mode responses contain no brands or entities.

About one-third (32.8%) of all AI responses in our dataset mentioned no brands or people at all. These are usually informational queries where no brand is searched for or expected in the response, such as “Nov 21 zodiac”, “revenue cycle”, and “meditation before bed”.

Some responses also showed no cited sources.
When citations are missing entirely, it’s typically for edge cases like:

AI Mode is more reliable for attribution. Only 3% of its responses lack citations, compared to 11% for AI Overviews. A few possible reasons:

These distinctions between AI Mode and AI Overviews have direct implications for how you approach AI optimization.

The bottom line: treat AI Mode and AI Overviews as separate channels with overlapping goals but different execution. Optimize for both, but don’t assume success in one translates to the other.
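
For readers who want to run a similar comparison on their own response pairs, the metrics described in the methodology can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the pipeline used in the study. The function names and the tokenization rule are hypothetical. Normalizing citation overlap by the union of cited URLs is an assumption, since the text only says overlap was measured by counting URLs that appear in both responses. The cosine function expects embedding vectors from whatever model you choose, because the study does not name the one it used.

```python
from __future__ import annotations

import math
import re


def _unique_words(text: str) -> set[str]:
    # Assumed tokenization: lowercase runs of letters, digits, apostrophes.
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard_similarity(text_a: str, text_b: str) -> float:
    """Unique words in common divided by all unique words."""
    words_a, words_b = _unique_words(text_a), _unique_words(text_b)
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def citation_overlap(urls_a: list[str], urls_b: list[str]) -> float:
    """Shared URLs divided by all distinct URLs (assumed normalization)."""
    set_a, set_b = set(urls_a), set(urls_b)
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def cosine_similarity(vec_a: list[float], vec_b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors of equal length."""
    dot = sum(a * b for a, b in zip(vec_a, vec_b))
    norm_a = math.sqrt(sum(a * a for a in vec_a))
    norm_b = math.sqrt(sum(b * b for b in vec_b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # Convention for empty or all-zero vectors.
    return dot / (norm_a * norm_b)


def includes_all_entities(ai_mode: set[str], ai_overview: set[str]) -> bool:
    """True when AI Mode mentions every entity the AI Overview mentioned."""
    return ai_overview <= ai_mode


if __name__ == "__main__":
    # Toy inputs for illustration only; these are not data from the study.
    print(jaccard_similarity("cloud storage is cheap", "cheap cloud backup"))
    print(citation_overlap(["a.com/1", "b.com/2"], ["b.com/2", "c.com/3"]))
    print(includes_all_entities(
        {"Mayo Clinic", "Cleveland Clinic", "WebMD"}, {"Mayo Clinic"}
    ))
```

Averaging `jaccard_similarity` and `cosine_similarity` across all pairs gives figures comparable in kind to the 0.16 and 0.86 reported above, though the exact values depend on the tokenizer and embedding model you pick.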

Why the difference in citation rates?

Fransebas
